News

A short statement on the Heartbleed problem and its impact on common Internet users.

On the 7th of April 2014 a team of security engineers (Riku, Antti and Matti) at Codenomicon and Neel Mehta of Google Security published information on a security issue in OpenSSL. OpenSSL is a piece of software used in the encryption process; it helps encrypt your computer traffic so that unauthorized people cannot understand what you are sending from one computer network to another. It is used in many applications: for example, if you use on-line banking websites, code such as OpenSSL helps to ensure that your PIN code remains secret.

The information that was released caused great turmoil in the security community, and many panic buttons were pressed because of the widespread use of OpenSSL. If you are using a computer and the Internet you might be impacted: people at home just as much as major corporations. OpenSSL is used, for example, in web, e-mail and VPN servers and even in some security appliances. However, the fact that you have been impacted does not mean you can no longer use your PC or any of its applications. You may be a little more vulnerable, but the end of the world may still be further than you think. First of all, some media reported on the “Heartbleed virus”. Heartbleed is in fact not a virus at all. You cannot be infected with it and you cannot protect against being infected. Instead it is an error in the computer programming code for specific OpenSSL versions (not all) which a hacker could potentially use to obtain information from the server (which could possibly include passwords and encryption keys, along with other random data in the server’s memory), potentially allowing them to break into a system or account.
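For readers who want to see the kind of programming error involved, here is a minimal toy model in Python. It is not OpenSSL code: the function `heartbeat_response`, the fake `memory` contents and the `check_bounds` switch are all invented for illustration. It assumes only the publicly documented nature of the bug, namely that the server echoed back as many bytes as the request claimed to contain without checking that claim against the data actually sent.

```python
# Toy model of a Heartbleed-style over-read. All names and data are hypothetical.

def heartbeat_response(memory: bytes, claimed_len: int, actual_len: int,
                       check_bounds: bool) -> bytes:
    """Echo back the heartbeat payload stored at the start of `memory`.

    `claimed_len` is the length the client says it sent; `actual_len` is the
    length it really sent. The vulnerable behaviour trusts `claimed_len`.
    """
    if check_bounds and claimed_len > actual_len:
        # Fixed behaviour: a request that lies about its length is dropped.
        return b""
    # Vulnerable behaviour: read `claimed_len` bytes, even past the payload.
    return memory[:claimed_len]


payload = b"bird"
# Pretend the server's memory holds unrelated data right after the payload.
memory = payload + b" user=alice password=hunter2 session=abc123"

if __name__ == "__main__":
    print(heartbeat_response(memory, 4, len(payload), check_bounds=False))
    print(heartbeat_response(memory, 40, len(payload), check_bounds=False))
    print(heartbeat_response(memory, 40, len(payload), check_bounds=True))
```

The second call asks for 40 bytes although only 4 were sent, so the reply carries whatever happened to sit next to the payload. This is why the leaked data is described as random: the attacker does not choose what is stored there.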

Luckily, most applications in which OpenSSL is used rely on more security measures than only OpenSSL. Most banks, for instance, continuously work to remain abreast of security issues, and have implemented several measures that lower the risk this vulnerability poses. An example of such a protective measure is transaction signing with an off-line card reader or other forms of two-factor authentication. Typically, exploiting the vulnerability on its own will not allow an attacker to post fraudulent transactions if you are using two-factor authentication or an off-line token generator for transaction signing.

So in summary, does the Heartbleed vulnerability affect end-users? Yes, but not dramatically. A lot of the risk to the end-users can be lowered by following common-sense security principles:

- Regularly change your on-line passwords (as soon as the websites you use let you know they have updated their software, this is worthwhile, but it should be part of your regular activity)

- Ideally, do not use the same password for two on-line websites or applications

- Keep the software on your computer up-to-date.

- Do not perform on-line transactions on a public network (e.g. WiFi hotspots in an airport). Anyone could be trying to listen in.

Security Stack Exchange has a wide range of questions on Heartbleed ranging from detail on how it works to how to explain it to non-technical friends.

Authors: Ben Van Erck, Lucas Kauffman

How can you protect yourself from CRIME, BEAST’s successor?

Juliano Rizzo and Thai Duong, the two researchers who developed the BEAST attack against TLS 1.0/SSL 3.0 in September 2011, have developed another attack, which they plan to publish at the Ekoparty conference in Argentina later this month. This time it gives them the ability to hijack HTTPS sessions, and this has started people worrying again.

Security Stack Exchange member Kyle Rozendo asked this question:

With the advent of CRIME, BEASTs successor, what is possible protection is available for an individual and / or system owner in order to protect themselves and their users against this new attack on TLS?

And the community expectation was that we wouldn’t get an answer until Rizzo and Duong presented their attack.

However, our highest reputation member, Thomas Pornin, delivered this awesome hypothesis, which I will quote here verbatim:

This attack is supposed to be presented in 10 days from now, but my guess is that they use compression.

SSL/TLS optionally supports data compression. In the ClientHello message, the client states the list of compression algorithms that it knows of, and the server responds, in the ServerHello, with the compression algorithm that will be used. Compression algorithms are specified by one-byte identifiers, and TLS 1.2 (RFC 5246) defines only the null compression method (i.e. no compression at all). Other documents specify compression methods, in particular RFC 3749 which defines compression method 1, based on DEFLATE, the LZ77-derivative which is at the core of the GZip format and also modern Zip archives. When compression is used, it is applied on all the transferred data, as a long stream. In particular, when used with HTTPS, compression is applied on all the successive HTTP requests in the stream, header included. DEFLATE works by locating repeated subsequences of bytes.

Suppose that the attacker is some Javascript code which can send arbitrary requests to a target site (e.g. a bank) and runs on the attacked machine; the browser will send these requests with the user’s cookie for that bank — the cookie value that the attacker is after. Also, let’s suppose that the attacker can observe the traffic between the user’s machine and the bank (plausibly, the attacker has access to the same LAN of WiFi hotspot than the victim; or he has hijacked a router somewhere on the path, possibly close to the bank server).

For this example, we suppose that the cookie in each HTTP request looks like this:

> Cookie: secret=7xc89f+94/wa

The attacker knows the “Cookie: secret=” part and wishes to obtain the secret value. So he instructs his Javascript code to issue a request containing in the body the sequence “Cookie: secret=0”. The HTTP request will look like this:

> POST / HTTP/1.1
> Host: thebankserver.com
> (…)
> Cookie: secret=7xc89f+94/wa
> (…)
>
> Cookie: secret=0

When DEFLATE sees that, it will recognize the repeated “Cookie: secret=” sequence and represent the second instance with a very short token (one which states “previous sequence has length 15 and was located n bytes in the past”); DEFLATE will have to emit an extra token for the ‘0’.

The request goes to the server. From the outside, the eavesdropping part of the attacker sees an opaque blob (SSL encrypts the data) but he can see the blob length (with byte granularity when the connection uses RC4; with block ciphers there is a bit of padding, but the attacker can adjust the contents of his requests so that he may phase with block boundaries, so, in practice, the attacker can know the length of the compressed request).

Now, the attacker tries again, with “Cookie: secret=1” in the request body. Then, “Cookie: secret=2”, and so on. All these requests will compress to the same size (almost — there are subtleties with Huffman codes as used in DEFLATE), except the one which contains “Cookie: secret=7”, which compresses better (16 bytes of repeated subsequence instead of 15), and thus will be shorter. The attacker sees that. Therefore, in a few dozen requests, the attacker has guessed the first byte of the secret value.

He then just has to repeat the process (“Cookie: secret=70”, “Cookie: secret=71”, and so on) and obtain, byte by byte, the complete secret.

What I describe above is what I thought of when I read the article, which talks about “information leak” from an “optional feature”. I cannot know for sure that what will be published as the CRIME attack is really based upon compression. However, I do not see how the attack on compression cannot work. Therefore, regardless of whether CRIME turns out to abuse compression or be something completely different, you should turn off compression support from your client (or your server).

Note that I am talking about compression at the SSL level. HTTP also includes optional compression, but this one applies only to the body of the requests and responses, not the header, and thus does not cover the Cookie: header line. HTTP-level compression is fine.

(It is a shame to have to remove SSL compression, because it is very useful to lower bandwidth requirements, especially when a site contains many small pictures or is Ajax-heavy with many small requests, all beginning with extremely similar versions of a mammoth HTTP header. It would be better if the security model of Javascript was fixed to prevent malicious code from sending arbitrary requests to a bank server; I am not sure it is easy, though.)
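The length leak hypothesised in the quoted answer can be tried out with Python's standard `zlib` module, which implements DEFLATE. The sketch below is only an illustration under simplifying assumptions: the request layout in `build_request` is reduced to a few header lines, the helper names are invented, and no TLS or encryption is involved, so the compressed length stands in for what an eavesdropper would measure.

```python
import zlib

# Cookie value from the example above; the attacker does not know it.
SECRET = b"7xc89f+94/wa"


def build_request(body: bytes) -> bytes:
    """Simplified HTTP request: fixed headers, the victim's cookie, then an
    attacker-chosen body."""
    return (
        b"POST / HTTP/1.1\r\n"
        b"Host: thebankserver.com\r\n"
        b"Cookie: secret=" + SECRET + b"\r\n"
        b"\r\n" + body
    )


def observed_length(body: bytes) -> int:
    """Size of the DEFLATE-compressed request, i.e. what the eavesdropper sees."""
    return len(zlib.compress(build_request(body), 9))


if __name__ == "__main__":
    # Try every candidate for the first character of the secret.
    for guess in "0123456789":
        body = b"Cookie: secret=" + guess.encode()
        print(guess, observed_length(body))
```

As the answer warns, the Huffman coding stage can blur the difference for a single character, so a real attack would need more careful request shaping than this loop. The effect is unmistakable when the body repeats a long stretch of the header, which is the property the attack builds on.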

As bobince commented:

I hope CRIME is this and we don’t have two vulns of this size in play! However, I wouldn’t say that being limited to entity bodies makes HTTP-level compression safe in general… whilst a cookie header is an obvious first choice of attack, there is potentially sensitive material in the body too. eg Imagine sniffing an anti-XSRF token from response body by causing the browser to send fields that get reflected in that response.

It is reassuring that there is a fix, and my recommendation would be for everyone to assess the risk to them of having sessions hijacked and seriously consider disabling SSL compression support.
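As one concrete illustration of that recommendation, Python's standard `ssl` module exposes the `OP_NO_COMPRESSION` option. The helper name below is invented for this sketch, and other clients and servers have their own settings, so treat this as an example of the idea rather than a universal recipe.

```python
import ssl


def context_without_tls_compression() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses SSL/TLS-level compression."""
    context = ssl.create_default_context()
    # Setting the flag explicitly documents the intent, even where the
    # library's defaults already include it.
    context.options |= ssl.OP_NO_COMPRESSION
    return context


if __name__ == "__main__":
    ctx = context_without_tls_compression()
    print(bool(ctx.options & ssl.OP_NO_COMPRESSION))
```

This only affects compression negotiated inside SSL/TLS; it does not change HTTP-level compression of response bodies.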

QOTW #32 – How can mini-computers (like Raspberry Pi) be applied to IT security?

This week’s question is nominated by me: How can mini-computers (like Raspberry Pi) be applied to IT security?

There are 2 parts to the question.

1) What practical applications such mini-computers have in IT security, or what role they could play in cyber-defense, network analysis, etc.

2) What risks might they introduce to a local network environment that should be considered?

The top-rated answer, by Tobias, suggests that mini-computers like the Raspberry Pi could be used as cheap penetration testing tools, linking to the Pwnpi distribution, a specialized Linux distro for the Raspberry Pi. The answer also links to the Pwnplug, a small, compact penetration testing drop box that has a full suite of penetration testing tools.

The answer by Rofls reinforces the answer by Tobias, stating that mini-computers like the Raspberry Pi could be used as cheap, disposable hardware for hacking when coupled with a USB wireless adapter. The hacker would only lose a small amount of money if the Raspberry Pi was discovered.

The answer by Diarmaid links to IPFire, which can turn the Raspberry Pi into a cheap firewall or IDS/IPS.

adamo also suggests that the Pi can be used to build cheap sensor networks to monitor WiFi, Ethernet or Bluetooth. He also suggests that the Pi can be used as an emergency DHCP or DNS server in situations where a quick fix is needed.

The Raspberry Pi is an example of a growing class of cheap hardware that can be used for hacking and penetration testing purposes. HakShop has a great set of cheap hardware that can perform tasks that used to require dedicated equipment costing thousands of dollars. This makes the threat of hacking even more prevalent as more users are able to afford the hardware needed to perform specialised tasks.

This is clearly going to present a new and interesting challenge for security professionals to defend against. I look forward to seeing what solutions the many bright minds in this industry can come up with.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

SOPA – what does it all mean

You may have noticed all the blacked out sites on the Internet on the 18th of January, but possibly aren’t aware of why they were doing this. The answer is SOPA – the poorly named “Stop Online Piracy Act”.

In itself, that sounds fine, right? We want to cut piracy so this must be good. Well, no.

We already have laws in many countries which allow us to take down websites hosting pirated content, but in many countries the process is one of “Innocent until proven guilty” – this means evidence needs to be provided, legal processes have to take place, and a court can rule that a website must be shut down. The DMCA lets the US do that.

What SOPA does is change the balance to “Guilty until proven innocent” – which means that a website can be taken down just because of an allegation of copyright infringement.

StackExchange is a good example: occasionally people post plagiarised content. We rely on the community flagging this content, and moderators then remove it as fast as possible. (Look at Joel’s answer to this question to see the full process under DMCA and how this protects StackExchange legally.) If SOPA were in force, StackExchange would be in danger of being removed from the Internet for any violation as it would be seen as guilty.

And this holds true for websites large and small – personal blogs, comics, Wikipedia, Reddit, Google and many others.

What is also very worrying is the US’s history of going after individuals in other countries citing US law, when those individuals have no connection to the US. See Richard O’Dwyer’s case – while what he did may have been used for nefarious purposes, all he really provided was links to other websites, and yet the US have forced the UK to extradite him. With a significant portion of the Internet under some US control, it wouldn’t be stretching the truth to assume this US bill would affect many other countries.

Another outcome if SOPA came into force would be an acceleration of businesses away from networks owned or controlled by the US, which may directly lead to greater piracy threats.

The Electronic Frontier Foundation, a strong force for free speech on the Internet, has this handy guide to SOPA.

So why didn’t StackExchange join the blackout? – see this answer: the content is owned by the community, so the entire community would have had to agree on the blackout.

Have a look at this video by Fight for the Future or this awesome TED talk for some further info.

QotW #11: Is it possible to have a key for encryption, that cannot be used for decryption?

This week’s question of the week was asked by George Bailey, who wanted to know if it were possible to have a key for encryption that could not be used for decryption. This seems at first sight like a simple question, but underneath it there are some cryptographic truths that are interesting to look at.

Firstly, as our first answerer SteveS pointed out, the process of encrypting data according to this model is asymmetric encryption. Steve provided links to several other answers we have. First up from this list was asymmetric vs symmetric encryption. From our answers there, public key cryptography requires two keys, one that can only encrypt material and another which can decrypt material. As was observed in several answers, when compared to straightforward symmetric encryption, the requirement for the public key in public key cryptography creates a large additional burden that depends heavily on careful mathematics, while symmetric key encryption really relies on the confusion and diffusion principle outlined in Shannon’s 1949 Communication Theory of Secrecy Systems. I’ll cover some other points raised in answers later on.

A similarly excellent source of information is what are private and public key cryptography and where are they useful?

So that answered the “is it possible to have such a system” question; the next step is how. This question was asked on the SE network’s Crypto site: how does asymmetric encryption work? In brief, in the most commonly used asymmetric encryption algorithm (RSA), the core element is a trapdoor function or permutation – a process that is relatively trivial to perform in one direction, but difficult (ideally, impossible, but we’ll discuss that in a minute) to perform in reverse, except for those who own some “insider information”, namely knowledge of the private key. For this to work, the “insider information” must not be guessable from the outside.

This leads directly into interesting territory on our original question. The next linked answer was what is the mathematical model behind the security claims of symmetric ciphers and hash algorithms. Our accepted answer there by D.W. tells you everything you need to know – essentially, there isn’t one. We only believe these functions are secure based on the fact no vulnerability has yet been found.

The problem then becomes: are asymmetric algorithms “secure”? Let’s take RSA as an example. RSA uses a trapdoor permutation, which is raising values to some exponent (e.g. 3) modulo a big non-prime integer (the modulus). Anybody can do that (well, with a computer at least). However, the reverse operation (extracting a cube root) appears to be very hard, except if you know the factorization of the modulus, in which case it becomes easy (again, using a computer). We have no actual proof that factoring the modulus is required to compute a cube root; but more than 30 years of research have failed to come up with a better way. And we have no actual proof either that integer factorization is inherently hard; but that specific problem has been studied for, at least, 2500 years, so easy integer factorization is certainly not obvious. Right now, the best known factorization algorithm is the General Number Field Sieve, and its cost becomes prohibitive when the modulus grows (the current world record is for a 768-bit modulus). So it seems that RSA is secure (with a long enough modulus): breaking it would require outsmarting the best mathematicians in the field. Yet it is conceivable that a new mathematical advance may occur any day, leading to an easy (or at least easier) factorization algorithm. The basis for the security claim remains the same: smart people spent time thinking about it, and found no weakness.
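The trapdoor idea can be made concrete with a deliberately tiny example. The sketch below uses the exponent 3 mentioned above and the toy primes 11 and 17, which are chosen purely for illustration (real moduli are thousands of bits long and real RSA adds padding). The function names are invented here, and `pow(e, -1, phi)` assumes Python 3.8 or later.

```python
from math import gcd

# Toy parameters for illustration only; never use sizes like this in practice.
P, Q = 11, 17      # secret prime factors (the "insider information")
N = P * Q          # public modulus, a non-prime integer
E = 3              # public exponent


def forward(m: int) -> int:
    """The easy direction: cube modulo N. Anyone who knows (N, E) can do it."""
    return pow(m, E, N)


def private_exponent(p: int, q: int, e: int) -> int:
    """Derive the inverse exponent, which requires the factorization of N."""
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be invertible modulo (p-1)(q-1)"
    return pow(e, -1, phi)


def cube_root_with_trapdoor(c: int) -> int:
    """The reverse direction, easy only because P and Q are known."""
    return pow(c, private_exponent(P, Q, E), N)


def cube_root_by_search(c: int) -> int:
    """Without the factors, this sketch can only try every candidate value."""
    return next(m for m in range(N) if forward(m) == c)


if __name__ == "__main__":
    c = forward(42)
    print(c, cube_root_with_trapdoor(c), cube_root_by_search(c))
```

With a modulus of 187 the exhaustive search finishes instantly, which is exactly why the size of the modulus matters: the search space grows with the modulus while the trapdoor computation stays cheap.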

Cryptography offers very few algorithms with mathematically proven security (e.g. One-Time Pad), let alone practical algorithms with mathematically proven security; none of them is an asymmetric encryption algorithm. There is no proof that asymmetric encryption can really exist. But there is no proof that hash functions exist, either, and it never prevented anybody from using hash functions.

Blog promotion aficionado Jeff Ferland provided some extra detail in his answer. Specifically, Jeff addressed which cipher setup should be used for actually encrypting the data, noting that the best setup for most real world scenarios is the combined use of asymmetric and symmetric cryptography as occurs in PGP, for example, where a transfer key encrypts the data using symmetric encryption and that key, a much smaller piece of data, can effectively be protected by asymmetric encryption; this is often called “hybrid encryption”. The reason asymmetric encryption is not used throughout, aside from speed, is the padding requirement, as Jeff himself and this question over on Crypto.SE discuss.

So in conclusion, it is definitely possible to have a key that works only for encryption and not for decryption; it requires mathematical structure, and faith in the difficulty of inverting some of these operations. However, using asymmetric encryption correctly and effectively is one of the biggest challenges in the security field; beyond the maths, private key storage, public key distribution, and key usage without leaking confidential information through careless implementation are very difficult to get right.

Some More BSidesLV and DEFCON

Internet connectivity issues kept me from being timely in updating, and a need for sleep upon my return led me to soak up all of the rest of BSides and DEFCON. That means just a few talks are going to be brought up.

First, there’s Moxie Marlinspike’s talk about SSL. In my last post, I had mentioned that I thought SSL has reached the point where it is due to be replaced. In the time between writing that and seeing his talk, I talked with a few other security folk. We all agreed that DNSSEC made for a better distribution model than the current SSL system, and wondered before seeing Moxie’s presentation why he would add so much more complexity beyond that. The guy next to me (I’ll skip name drops, but he’s got a security.stackexchange.com shirt now and was all over the news in the last year) and I talked before his presentation, and at the end agreed that Moxie’s point about trusting the DNS registry and operators to not change keys could be a mistake.

Thus, we’re still in the world of certificates and complicated x.509 parsing that has a lot of loopholes, and we’ve added something that the user needs to be aware of. However, we have one solid bonus: many independent and distributed sources must now collaborate to verify a secure connection. If one of them squawks, you at least have an opportunity to be aware. An equally large entry exists in the negative column: it is likely that many security professionals themselves won’t enjoy the added complexity. There’s still a lot of research work to be done; however, the discussion is needed now.

PCI came up in discussion a little bit last year, and a lot more this year. In relation, the upcoming Penetration Testing Execution Standard was discussed. Charlie Vedaa gave a talk at BSides titled “Fuck the Penetration Testing Execution Standard”. It was a frank and open talk with a quick vote at the end: the room as a whole felt that despite the downsides we see in structures like PCI and the PTES, we were better off with them than without.

The line for DEFCON badges took most people hours and the conference was out of the hard badges on the first day. Organizers say it wasn’t an issue of under-ordering, but rather that they had exhausted the entire commercial supply of “commercially pure” titanium to make the badges. Then the madness started…

AT&T’s network had its back broken under the strain of DEFCON, resulting in tethering being useless and text messages showing up in batches sometimes more than 30 minutes late. The Rio lost its ability to check people into their hotel rooms or process credit cards. Some power issues affected the neighboring hotel at a minimum: Gold Coast had a respectable chunk of casino floor and restaurant space in the dark last evening. The audio system for the Rio’s conference area was apparently taken control of and the technicians locked out of their own system. Rumors of MITM cellular attacks abounded at the conference and now, days later, abound in the press. Given talks last year including a demonstration and talks this week at the Chaos Communication Camp, the rumors are credible. We’ll wait to see evidence, though.

DEFCON this year likely had more than 15,000 attendees, and they hit the hotel with an unexpected force. Restaurants were running out of food. Talks were sometimes packed beyond capacity. The Penn and Teller theater was completely filled for at least three talks I had interest in, locking me outside for one of them. The DEFCON WiFi network (the “most hostile in the world”) suffered some odd connectivity issues and a slow-or-dead DHCP server.

Besides a few articles in the press that have provided interesting public opinion, one enterprising person asked a few random non-attendees at the hotel what they thought of the event. The results are… enlightening.

Could Apple bring secure email to the masses with iOS 5

One of my worst ever projects was implementing PGP email encryption. Considering I have only worked in large financial services companies, that is saying something. I have reflected on the lessons learnt from this project before, but I felt that PGP was fundamentally flawed. When Apple iOS 5 was unveiled, there was a small feature that no one talked about. It was hidden among the sparkling jewels of notifications, free messaging and “just works” synchronization. That feature was S/MIME email encryption support. S/MIME is not new; however, Apple is uniquely positioned with their ecosystem and user-centric design to solve the fundamental problems and bring secure email to the masses.

I have previously detailed the problems that email encryption solves and those it does not. To re-iterate and update:

Attacker eavesdropping or modifying an email in transit, both on public networks such as the Internet and unprotected wireless networks as well as private internal networks. Recently I have experienced things that have hammered home the reality of this threat: heartfelt presentations at security conferences such as Uncon about the difficulties faced by those in oppressive regimes such as Iran, where the government reading your email could result in death or worse for you and your family; and even “liberal” governments such as the US blatantly ignoring the law to perform mass domestic wire tapping in the name of freedom. The right to privacy is a human right. Most critical communication these days is electronic. There is a clear problem to be solved in keeping this communication only to its intended participants.

Attacker reading or modifying emails in storage. The recent attacks on high profile targets such as Sony and low profile ones like MTGox, who were brought low by simple exploitation of unpatched systems and SQL injection vulnerabilities, have revealed an unintended consequence. The email addresses and passwords published were often enough to allow attackers access to a user’s email account, as the passwords were re-used. I don’t know about you, but these days a lot of my most important information is stored in my Gmail account. The awesome search capability, high storage capacity and accessibility everywhere mean that it is a natural candidate for scanned documents and notes as well as the traditional highly personal and private communications. There is a problem to be solved of adding a layered defence; an additional wall in your castle if the front gate is breached.

Attacker is able to send emails impersonating you. It is amazing how much email is trusted today as being actually from the stated sender. In reality, normal email provides very little non-repudiation. This means it is trivial to send an email that appears to come from someone else. This is a major reason why phishing, and spear phishing in particular, are still so successful. Now spam blockers, especially good ones like Postini, have become very good at examining email headers, verified sender domains, MX records etc. to reject fraudulent emails (which is effective unless of course you are an RSA employee and dig it out of your quarantine). You can also have a more localised proof of concept of this. If your work accepts manager e-mail approvals for things like pay rises and software access, and it also has open SMTP relays, you can have some fun by Googling SMTP telnet commands. There is a problem to solve in how to reliably verify the sender of the email and ensure the contents have not been tampered with.

Problems not solved by email encryption and signing:

- Sending the wrong contents to the right person(s)

- Sending the right contents to the wrong person(s)

- Sending the wrong contents to the wrong person(s)

These are important to keep in mind because you need to choose the right tool for the job. Email encryption is not a panacea for all email mis-use cases.

So having established that there is a need for email encryption and signing, what are the fundamental problems with a market leading technology like PGP (now owned by Symantec)?

Fundamental problems in summary:

Key exchange. Bob Greenpeace wants to send an email to Alice Activist for the first time. He is worried about Evil Government and Greedy OilCorp reading it and killing them both. Bob needs Alice’s public key to be able to encrypt the document. If Bob and Alice both worked at the same company and had PGP Universal configured correctly, or worked in organisations that both had PGP Universal servers exposed externally, or had the foresight to publish their public keys to the public PGP global key server, all would be well. However, this is rarely the case. Also, email is a conversation. Alice not only wants to reply but also to add in Karl Boatdriver in planning the operation. Now suddenly Bob and Alice need his public key and he needs theirs. Bob, Alice and Karl are all experts in their field but not technical and have no idea how to export and send their public keys and install these keys before sending secure email. They have an operation to plan for Thursday!

Working transparently and reliably everywhere. Bob is also running Outlook on his Windows Phone 7 (chuckles), Alice Pegasus on Ubuntu and Karl Gmail via Safari on his iPad. Email encryption, decryption, signing, signature verification and key exchange all need to just work on all of these and more. It also cannot be like PGP Desktop, which uses a dirty hack to hook into a rich email client. This gives it extremely good reliability and performance. Intermittent issues like emails going missing and blank emails never occur. Calls to PGP support for any enterprise installation are few and far between. All users in companies love PGP and barely notice it is there. In many organisations email is the highest tier application. It has to have three nines uptime. If Alice and Karl are about to be captured by Greedy OilCorp and they need to get an email to Bob, they cannot afford to get a message blocked by a PGP error.

Less fundamental but still annoying:

- Adding storage encryption – there is no simple way with PGP to encrypt a clear text email in storage. You can send it to yourself again or export it to a file system and encrypt it there, but that is it. This is a problem where the email is not sensitive enough to encrypt in transit (or where you simply forgot) but where, after the fact, you do care if script kiddies with your email password get that email and publish it on Pastebin.

How Apple could solve these fundamental problems with iOS 5

Key exchange – If you read marknca’s excellent iOS security guide, you will see that Apple has effectively built a Public Key Infrastructure (PKI). Cryptographically signing things like apps and checking this signature before allowing them to run is key to their security model. This could be extended to users to provide email encryption and specifically transparent key exchange in the following manner:

- Each user with an Apple ID would have a public/private key pair automatically generated

- The public keys would be stored on the Apple servers and available via API and on the web to anyone

- The private keys would be encrypted to the user’s Apple account passphrase and be synchronised via iCloud to all iOS and Mac endpoints

- To send a secure email the user would just click a secure button on their email tool

- When sending an email via an iOS device, a Mac or via the MobileMe web interface, it would query the Apple servers for the recipient’s public key and encrypt and (optionally) sign the email

- On receipt as long as the device had been unlocked the email would be decrypted. For web access as long as the certificate was stored in the browser the email would be decrypted (could be an addon to Safari)

- To encrypt an email in storage you would just drag it to a folder called private email. You could write rules for what emails were automatically stored there.

This system would make key exchange, email encryption, decryption and signing totally transparent. Because S/MIME uses certificates, it is easy to get the “works everywhere” property. However, Apple would first enable this only for .me addresses, iOS devices and Macs, to enable them to sell more of these and to beef up their enterprise cred. Smart hackers and addon writers would soon make it work everywhere though. There you go, secure email for the masses.

BSidesLV 2011, Day 1

This week in Las Vegas is Christmas for security. In listening to four BSidesLV talks today, I’ve come to conclude that the community suffers from a real lack of discussion about interacting with management, mandatory access controls need to be enhanced to focus on applications, the SSL system is irreparably broken and DNSSEC really should replace it, and some potential laws related to hacking may be harbingers of a 100 year security dark age.

That’s a loaded paragraph, so here’s the breakdown: Adam Ely’s talk “Exploiting Management for Fun and Profit – or – Management is not Stupid, You Are” made a fantastic point about budgeting for security. Getting better security isn’t about convincing executives that they need better security. Better security is about understanding what the corporate goals are and fitting the application to that model. Consider a hospital executive’s primary goal: increase the survival rate of emergency room patients. How can your goals for security further that goal?

Val Smith’s “Are There Still Wolves Among Us” presented research showing a very skilled black hat community that has a quiet history of program modification at vendors, years-old 0-day exploits and wholesale compromise of security researchers. The summary point is that “cyber warfare” and “government-level” threats may come from non-government hackers, and they’re the quiet ones. LulzSec, Anonymous and the like are providing covering noise for the ones who don’t get caught. It is also possible that attacks which appear to come from foreign countries are being intentionally proxied by talented hackers.

“A Study of What Breaks SSL” by Ivan Ristic conveyed that the majority of servers are misconfigured somehow: data accepted, and sometimes login forms presented, on unencrypted pages; broken certificate chains; and servers still offering up SSLv2 in abundance. I’ve personally come to believe that the purpose of SSL (providing assurance that an encryption key belongs to the registered domain of the certificate) has been supplanted by the implementation of DNSSEC. As DNSSEC provides for a similar signature chain and distribution of keys, it ought to be used as the in-channel distribution method. Further to that, the bolt-on nature of SSL permits numerous attacks and misconfiguration possibilities that can prevent even negotiating SSL with a client. Those thoughts may be worthy of their own paper.
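One of those misconfigurations, login forms presented on or submitting to unencrypted pages, is easy to check for mechanically. The following is a minimal Python sketch using only the standard library. It is not the methodology from the talk, and `insecure_login_forms` is a name made up for this example. It assumes you already have the page URL and its HTML, and it treats any form containing a password field as a login form.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _FormScanner(HTMLParser):
    """Collect the action of every form that contains a password field."""

    def __init__(self):
        super().__init__()
        self._in_form = False
        self._has_password = False
        self._current_action = ""
        self.login_actions = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self._in_form = True
            self._has_password = False
            self._current_action = attrs.get("action") or ""
        elif tag == "input" and self._in_form:
            if (attrs.get("type") or "").lower() == "password":
                self._has_password = True

    def handle_endtag(self, tag):
        if tag == "form" and self._in_form:
            if self._has_password:
                self.login_actions.append(self._current_action)
            self._in_form = False


def insecure_login_forms(page_url, html):
    """Return a list of problems found with login forms on this page."""
    scanner = _FormScanner()
    scanner.feed(html)
    scanner.close()
    problems = []
    for action in scanner.login_actions:
        # An empty or relative action resolves against the page URL.
        target = urljoin(page_url, action)
        if urlparse(page_url).scheme != "https":
            problems.append("login form presented on an unencrypted page")
        if urlparse(target).scheme != "https":
            problems.append(f"login form submits to unencrypted URL {target}")
    return problems
```

A form served over HTTPS that posts to a relative URL produces an empty list. The same form served over plain HTTP is flagged twice, once for the page and once for the submission target.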

Finally, Schuyler Towne’s “Vulnerability Research Circa 1851” was a great look at the security culture of physical locks. It showed the evolution of lock security as it moved toward a system where knowing the mechanical construction of a lock didn’t prevent it from being secure. More importantly, it showed a 100-year drought of lock security filled with closed and legally enforced locksmith guilds, laws against lockpicking and the stalling of progress in adopted residential security locks; most American household locks are still using 100-year-old technology. It emphasized the potential disaster that adoption of laws such as Germany’s §202c “anti-hacking tools” law could present the security industry with: the golden age of lock development was spurred on by constant public challenges over lock security, and was then followed by a century-long dark age in which laws and culture prevented research that would advance security.

The first day of BSides has drawn to a close and the second day is opening. The lines for badges at DEFCON are some kind of absurd, and the week is just warming up. DEFCON organizers (“Goons”) are expecting 12,000 attendees. Why they have only pressed 9,200 attendee badges is a notable question, though, given the badge shortages of previous years. Security companies are actively and openly recruiting conference attendees from BSides, and I expect more of the same at DEFCON.

Security Stack Exchange graduated today!

After 242 days in Beta, we now have over 3000 users and an active community of security professionals, hobbyists and specialists providing input, answers, moderation, blog posts and their own time to make the site a global success.

Congratulations to all the members – your effort has paid off, and today we joined 27 other official sites in the Stack Exchange network, graduating as a fully fledged member. We’re excited to see the new visual design by @Jin (with comments and ideas from many of our core contributors) that permeates all aspects of the new site and blog. Various people see various things in it – the noble and powerful lion (Aslan?) on the great shield of security, wings for swiftness and protection, the various flanking maneuvers and battles raging in the background. As with the other Stack Exchange sites, you will even be able to get t-shirts and other logoware soon.

What does graduation mean?

Graduation brings a custom design, official inclusion in the Stack Exchange network (statistics, API tools etc.) and a greater presence online.

Reputation and Privileges

Private and public beta sites operate under reduced reputation requirements. This allows young sites to grow rapidly. However, when the site graduates from beta, the privilege levels return to their normal levels.

| Private Beta | Public Beta | Graduated | Privilege |
|---|---|---|---|
| 1 | 15 | 15 | Vote Up |
| 15 | 15 | 15 | Flag Offensive |
| 1 | 50 | 50 | Leave Comments |
| 1 | 100 | 100 | Edit Wiki Posts |
| 1 | 125 | 125 | Vote Down |
| 1 | 150 | 150 | Create New Tags |
| 1 | 200 | 200 | Retag Questions |
| 500 | 750 | 2000 | Edit Posts |
| 1 | 500 | 3000 | Vote to Close |
| 2000 | 2000 | 10000 | Access Mod Tools |

This means 19 of you have lost ‘Edit Posts’ privileges until you get over 2000, and 51 have lost ‘Vote to Close’ until you reach 3000. Don’t worry – you can always flag issues and a mod will take care of it. Once you reach the normal thresholds your privileges will automatically return.
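If you want to check where you stand, the thresholds above can be expressed as a small lookup. This is just an illustrative Python sketch; the function names are made up for this post and have nothing to do with how Stack Exchange implements privileges.

```python
# Reputation thresholds copied from the table above:
# privilege -> (private beta, public beta, graduated)
THRESHOLDS = {
    "Vote Up": (1, 15, 15),
    "Flag Offensive": (15, 15, 15),
    "Leave Comments": (1, 50, 50),
    "Edit Wiki Posts": (1, 100, 100),
    "Vote Down": (1, 125, 125),
    "Create New Tags": (1, 150, 150),
    "Retag Questions": (1, 200, 200),
    "Edit Posts": (500, 750, 2000),
    "Vote to Close": (1, 500, 3000),
    "Access Mod Tools": (2000, 2000, 10000),
}
STAGES = ("private beta", "public beta", "graduated")


def privileges(reputation, stage):
    """Return the set of privileges a user has at the given site stage."""
    column = STAGES.index(stage)
    return {name for name, levels in THRESHOLDS.items() if reputation >= levels[column]}


def lost_on_graduation(reputation):
    """Return the privileges held in public beta but not after graduation."""
    return privileges(reputation, "public beta") - privileges(reputation, "graduated")


print(sorted(lost_on_graduation(1000)))  # ['Edit Posts', 'Vote to Close']
```

A user with 1000 reputation could edit posts and vote to close during the public beta, but needs 2000 and 3000 respectively now that the site has graduated.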

But what is the IT Security Stack Exchange for?

From the FAQ:

IT Security – Stack Exchange is for Information Security professionals to discuss protecting assets from threats and vulnerabilities. Topics include, but are not limited to:

- web app hardening

- network security

- phishing

- risk management

- policies

- penetration testing

- security tools

- using cryptography*

Celebrate success

Let your colleagues know about the site and the blog – we already get around 1000 visits a day, but the more people who come, the wider the pool of expertise we can bring in.

We also have a twitter hashtag – #stacksecurity – so feel free to communicate to the twitterverse to let people know that answers to a lot of security questions are here.

*Also, we have just heard that a closely related site, the Cryptography Stack Exchange, has just reached 100% commit so will be entering private Beta now. While Security Stack Exchange will continue to have as one of our disciplines the understanding and management of risk in crypto implementations, here we steer clear of the mathematical issues and concentrate on security and risk.

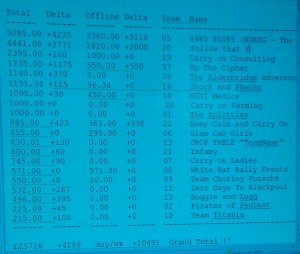

Security Stack Exchange Sponsored team wins UK’s White Hat Rally

White Hat Events is a collection of individuals from the UK’s Information Security Industry who get together to raise money for charity. The events each year include the White Hat Ball, Marathon, Golf, Cocktail Party and Rally.

The 2011 Carry-On themed White Hat Rally was fiercely fought over the weekend of 1 – 3 July, with teams from security consultancies, vendors, suppliers, and independent contractors all over the UK taking part, and raising money for and the NSPCC’s Childline, with a total raised by Sunday topping £25,000. Across the sunniest 3 days this summer we travelled from Brighton to Blackpool, following clues, competing in challenges, suffering japes, sabotage and mechanical issues, and enjoying the hospitality of towns along the way, as well as getting to know a like-minded bunch of security professionals all trying to make a difference. I joined the Northern UK Security Group (NUKSG) team in Leeds on Thursday, and we drove the Yellow Peril (an ancient Dodge Caravan bought for £350, bright yellow with an interior entirely covered in red velour) down to Brighton, where we met the other teams for a pleasant social…quite late on, due to starter motor issues, traffic, and the Yellow Peril’s lack of a top speed (among other issues).

Day one – we met up at Brighton beach, a motley collection of classic cars, sports cars, agricultural and emergency vehicles and bangers. The day involved a lovely journey across the South Downs, following clues and ending up in Cheltenham. Each team had GPS tracking apps to allow the organisers and families to see how we were doing.

At our first checkpoint stop the Pirates O’ Pentest opened up the back of their ambulance to display a fully featured and functional cocktail bar – which went down very well at each stop for the next 3 days – raising extra money for charity.

Lunch was hosted at Brooklands Museum, the birthplace of British motorsport and aviation, and included a speech by Diana Moran (the Green Goddess), who also led us in some mild aerobics, despite being in her 70s. I was delighted to sit on the famous banking I had heard about since my early childhood, poke around the classic cars and aircraft and play on the F1 simulator.

Due to a minor organisational hiccup, The StoryTeller restaurant in Cheltenham were not made aware of the party of 67 until a couple of hours before we arrived, but they coped amazingly well – getting us all seated and providing a lovely dinner. The Scavenger Hunt in Cheltenham attracted a few entrants, but we didn’t find out the results until Sunday night.

Day two – saw us winding through the countryside up to the oldest brewery in the UK, the Three Tuns in Shropshire, for lunch, a tour of the brewery and tasting of some new brews.

We also met the lovely Clare Marie – the hostess of Dr Sketchy’s London art events. The afternoon drive then led us up to Buxton and the Palace Hotel for our evening stop. Once again we were provided with an excellent dinner, this time at the Railway, and a Carry On quiz.

Day three – a relatively short run, with some straightforward clues that got us to Blackpool, and the Big Blue hotel – which is where we were finally joined by 2 of our number we hadn’t seen for the entire event…because they cycled the entire way!! Fancy dress was out in force, and everyone had a great time on the rollercoasters and rides before dinner (can’t believe I stayed on the Big One for 3 laps – it’s 235 feet high, one of Europe’s highest roller coasters and I’m terrified of heights!) and prizegiving at the White Tower.

Team NUKSG did not win best dressed car, best fancy dress, or the prize for the quiz or scavenger hunt. However, we did raise the most money, so we were the overall winners and took home the star prize – a bottle of the Three Tuns’ Cleric’s Cure each!

We are obviously keen to keep raising money so please visit our sponsorship page.

The official picture page is here at Picasa – with folders of photos from each car, as well as the Marshalls.

Many thanks again to my sponsors:

Security Stackexchange – Robert and the team provided us with sponsorship and we grabbed a couple of Stackexchange logos to stick on the car, one on each side. This went down well with the technical security folks we were competing with.

Virgin Money – Virgin’s banking department, and the providers of Virgin Money Giving – the only not-for-profit charity payments site.

Metaltech – my rock band, preparing for our new album launch party in August (@metltek and #burnyourplanet on Twitter)