Configuration

QoTW #50: Does password protecting the BIOS help in securing sensitive data

Camil Staps asked this question back in April 2013. Although it is generally accepted that using a BIOS password is good practice, he couldn’t see what protection it would provide, given, in his words, “…there aren’t really interesting settings in the BIOS, are there?”

While Camil reckoned that the BIOS only holds things like date and time, and enabling drives etc., the answers and comments point out some of the risks, both in relying on a BIOS password and in thinking the BIOS is not important!

The accepted answer from Iszi covers off why the BIOS should be protected:

…an attacker to bypass access restrictions you have in place on any non-encrypted data on your drives. With this, they can:

- Read any data stored unencrypted on the drive.

- Run cracking tools against local user credentials, or download the authenticator data for offline cracking.

- Edit the Registry or password files to gain access within the native OS.

- Upload malicious files and configure the system to run them on next boot-up.

And what you should do as a matter of course:

That said, a lot of the recommendations in my post here (and other answers in that, and linked, threads) are still worth considering.

- Encrypt the hard drive

- Make sure the computer is physically secure (e.g.: locked room/cabinet/chassis)

- Use strong passwords for encryption & BIOS

Password-protecting the BIOS is not entirely an effort in futility.

Thomas Pornin covers off a possible reason for changing the date:

…by making the machine believe it is in the far past, the attacker may trigger some other behaviour which could impact security. For instance, if the OS-level logon uses smart cards with certificates, then the OS will verify that the certificate has not been revoked. If the attacker got to steal a smart card with its PIN code, but the theft was discovered and the certificate was revoked, then the attacker may want to alter the date so that the machine believes that the certificate is not yet revoked.

But all the answers agree that all a BIOS password does on its own is protect the BIOS settings, and even that protection can be bypassed by an attacker who has physical access to the machine. So, as per Piskvor’s answer:

you need to set up some sort of disk encryption (so that the data is only accessible when your system is running)

Like this question of the week? Interested in reading more detail, and other answers? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

Stump the Chump with Auditd 01

ServerFault user ewwhite describes a rather interesting situation regarding application distribution wherein code must be compiled in production. In short he wants to keep track of changes to a specific directory path and send alerts via email.

Let’s assume that there already exists some basic form of auditd in play, so as such we’ll be building out a snippet to be inserted into your existing /etc/audit/audit.rules. Ed was sparse on some of the specifics related to the application, understandably so, so let’s make some additional assumptions. Let’s assume that the source code directory in question is “/opt/application/src” and that all binaries are installed into “/opt/application/bin“.

    -w /opt/application/src -p w -k app-policy
    -w /opt/application/bin -p wa -k app-policy

I’ve decided to process each directory, source and binaries, separately. What the two rules have in common are the ‘-w’ and ‘-k’ options. The ‘-w’ option says to watch that directory recursively. The ‘-k’ option says to add the text “app-policy” to the output log message; this is just to make log reviews easier. The ‘-p’ option is actually where the magic happens, and is the real reason to separate these two rules out.

As we discussed in the previous post, nearly 8 months ago, the option ‘-p w’ instructs the kernel to log on file writes. One would assume this is accomplished by attaching to the POSIX system call write. That syscall, however, gets called quite a lot when files are actually saved. So as to not overwhelm the logging system auditd instead attaches to the open system call. By using the (w)rite argument we look for any instance of open that uses the O_WRONLY or O_RDWR flags. It’s worth noting that this does not mean a file was actually modified, only that it was opened in such a way that would allow for modification. For example, if a user opened “/opt/application/src/app.h” in a text editor a log would be generated, however if it was written to the terminal using cat or read using less then no log would be generated. This is pretty important to remember as many people will read a file using a text editor and simply exit without saving changes (hopefully).
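To make the flag test concrete, here is a minimal Python sketch of the check described above. It is only an illustration of the idea, not auditd's actual implementation; the function name `opened_for_write` is made up for this example, and the `0xC2` value is the `a1` argument from the vim record shown later in this post, interpreted with Linux x86-64 flag values.

```python
import os

# The access mode lives in the low bits of the open(2) flags argument.
ACCESS_MODE_MASK = os.O_RDONLY | os.O_WRONLY | os.O_RDWR


def opened_for_write(flags: int) -> bool:
    """Return True if the open(2) flags ask for O_WRONLY or O_RDWR access."""
    return (flags & ACCESS_MODE_MASK) in (os.O_WRONLY, os.O_RDWR)


# Reading a file with cat or less uses O_RDONLY, so the watch stays quiet.
print(opened_for_write(os.O_RDONLY))
# Asking for write access matches, even if nothing is ever written.
print(opened_for_write(os.O_WRONLY | os.O_APPEND))
# a1=c2 from the vim swap file record (O_RDWR plus creation flags on Linux x86-64).
print(opened_for_write(0xC2))
```

Note that the check only looks at how the file was opened, which is exactly why an editor session that exits without saving can still produce a log entry.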

We also want to watch for file writes in the binary directory except here we would expect them to be more reliable. It would be rather unusual, but not out of the question, for someone to attempt to use a text editor to open an executable. In addition we added the (a)ttribute option. This will alert us if any of the ownership or access permissions change, most importantly if a file is changed to be executable or the ownership is changed. This will not catch SELinux context changes but since SELinux uses the auditd logging engine then those changes will show up in the same log file.

Now that we have the rules constructed we can move on to the alerting. Ed wanted the events to be emailed out. This is actually quite a bit more complicated. By default auditd uses its own built-in logger. This does make some sense when you consider the type of logging this system is intended to perform. By not relying on an external logger, like syslogd or rsyslog, it can better withstand mistaken configurations. On the downside it makes alternate logging setups trickier. There does exist a subsystem called ‘audispd’ that acts as a log multiplexer. There are a number of output plugins available, such as syslog, UNIX socket, prelude IDS, etc. None of them really do what Ed wants, so I think our best bet would be to run reports. Auditd is, after all, an auditing tool and not an enforcement tool. So let’s look at something a little different.

Remember how we tacked on ‘-k app-policy‘ to those rules above? Now we get to the why. Let’s try running the command:

    aureport -k -ts yesterday 00:00:00 -te yesterday 23:59:59

We should now see a list of all of the logs that contain keys and occurred yesterday. Let’s look at a concrete example of me editing a file in that directory and the subsequent logs.

    root@node1:~> mkdir -p /opt/application/src
    root@node1:~> vim /opt/application/src/app.h
    root@node1:~> aureport -k

    Key Report
    ===============================================
    # date time key success exe auid event
    ===============================================
    1. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13446
    2. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13445
    3. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13447
    4. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13448
    5. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13449
    6. 09/24/2013 11:41:35 app-policy yes /usr/bin/vim 1000 13451
    7. 09/24/2013 11:41:35 app-policy yes /usr/bin/vim 1000 13450

The report tells us that at 11:41:29 on September the 24th a user ran the command “/usr/bin/vim” and triggered a rule labeled app-policy. It’s all good so far, but not very detailed. The last two fields, however, are quite useful. The first, 1000, is the UID of my personal account. That is important because, as you’ll notice, I was actually running as root. Since I had originally used “sudo -i” to gain a root shell my original UID was still preserved, which is good! The last field is a unique event ID generated by auditd. Let’s look at that first event, numbered 13446.

    root@node1:~> grep :13446 /var/log/audit/audit.log
    type=SYSCALL msg=audit(1380037289.364:13446): arch=c000003e syscall=2 success=yes exit=4 a0=bffa20 a1=c2 a2=180 a3=0 items=2 ppid=21950 pid=22277 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 ses=1226 tty=pts0 comm="vim" exe="/usr/bin/vim" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key="app-policy"
    type=CWD msg=audit(1380037289.364:13446): cwd="/root"
    type=PATH msg=audit(1380037289.364:13446): item=0 name="/opt/application/src/" inode=2747242 dev=fd:01 mode=040755 ouid=0 ogid=0 rdev=00:00 obj=unconfined_u:object_r:usr_t:s0
    type=PATH msg=audit(1380037289.364:13446): item=1 name="/opt/application/src/.app.h.swx" inode=2747244 dev=fd:01 mode=0100600 ouid=0 ogid=0 rdev=00:00 obj=unconfined_u:object_r:usr_t:s0

This is what we mean when we say audit logs are verbose. In the introductory blog post we discussed some of those fields so I’ll save us the pain of going over it again. What we can see, however, is that the user with uid 1000 (see auid=1000) ran the command vim as root (see euid=0) and that command resulted in a change to both “/opt/application/src/” and “/opt/application/src/.app.h.swx”.
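If you end up post-processing these records yourself, the key=value layout is easy to pick apart. The following is a rough Python sketch, not part of the auditd tool suite: `parse_audit_record` is a hypothetical helper, the record is an abbreviated copy of the SYSCALL line above, and real logs contain field encodings (hex-encoded values, for instance) that this does not handle.

```python
import shlex

# Abbreviated copy of the SYSCALL record for event 13446.
RECORD = (
    'type=SYSCALL msg=audit(1380037289.364:13446): arch=c000003e syscall=2 '
    'success=yes exit=4 auid=1000 uid=0 euid=0 comm="vim" '
    'exe="/usr/bin/vim" key="app-policy"'
)


def parse_audit_record(line: str) -> dict:
    """Split one audit record into a dict of its key=value fields."""
    fields = {}
    for token in shlex.split(line):      # shlex strips the double quotes for us
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


fields = parse_audit_record(RECORD)
# auid is the UID that originally logged in; euid is the effective UID of the call.
ran_as_someone_else = fields["auid"] != fields["euid"]
print(fields["exe"], fields["key"], fields["auid"], fields["euid"], ran_as_someone_else)
```

Comparing auid with euid is a cheap way to flag events where a user was acting with another account's privileges, as in the sudo example here.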

What we should be able to see here is that the report generated by aureport doesn’t contain everything we need to see what happened, but it does tell us something happened and gives us what we need to find the details. In an ideal world you would have some kind of log aggregation system, like Splunk or a SIEM, and send the raw logs there. That system would then have all the alerting functionality built in to alert an admin to the potential policy violation. However, we don’t live in a perfect world and Ed’s request for email alerts indicates he doesn’t have access to such a system. What I would do is set up a daily cron job to run that report for the previous day. Every morning the log reviewer can check their mailbox and see if any of those files changed when they weren’t supposed to. If daily isn’t reactive enough then we can simply change the values passed to ‘-ts’ and ‘-te’ and run the job more frequently.

Pulling it all together we should have something that looks like this.

    #/etc/audit/audit.rules
    # This file contains the auditctl rules that are loaded
    # whenever the audit daemon is started via the initscripts.
    # The rules are simply the parameters that would be passed
    # to auditctl.

    # First rule - delete all
    -D

    # Increase the buffers to survive stress events.
    # Make this bigger for busy systems
    -b 320

    # Feel free to add below this line. See auditctl man page
    -w /opt/application/src -p w -k app-policy
    -w /opt/application/bin -p wa -k app-policy

    #/etc/cron.d/audit-report
    MAILTO=ewwhite@example.com

    1 0 * * * root /sbin/aureport -k -ts yesterday 00:00:00 -te yesterday 23:59:59

About the recent DNS Amplification Attack against Spamhaus: Countermeasures and Mitigation

A few weeks ago the anti-spam provider Spamhaus was hit by one of the biggest denial of service attacks ever seen, producing over 300 Gbit/s of traffic. The technique used to generate most of the traffic was DNS Amplification, a technique which doesn’t require thousands of infected hosts, but exploits misconfigured DNS servers and a serious design flaw in DNS. We will discuss how this works, what it abuses and how Spamhaus was able to mitigate the attack.

QoTW #44: How to block or detect user setting up their own personal wifi AP in our LAN?

Nominated by Terry Chia, this question by User15580 should be of interest to anyone managing the security of networks.

The answers show the variety of aspects security covers in this sort of scenario:

Daniel posted the top answer, and it has nothing to do with IT, but instead focuses on the cause – if a user has installed an access point it is because they need something the existing network is not providing. This is always worth considering:

Discuss with the users what they are trying to accomplish. Perhaps create an official wifi network ( use all the security methods you wish – it will be ‘yours’ ). Or, better, two – Guest and Corporate WAPs.

Polynomial and Thomas Pornin also highlighted the fact this is a user/managerial problem, rather than a technical one.

Remember Immutable Law of Security #10: Technology is not a panacea. Whilst technology can do some amazing things, it can’t enforce user behaviour. You have a user that is bringing undue risk to the organisation, and that risk needs to be dealt with. The solution to your problem is _policy_, not technology. Set up a security policy that details explicitly disallowed behaviours, and have your users sign it. If they violate that policy, you can go to your superiors with evidence of the violation and a penalty can be enforced.

As long as the users have physical access to the machines they use and their USB ports (that’s hard to avoid, unless you pour glue in all the USB ports…) and that the installed operating systems allow it (then again, hard to avoid if users are “administrators” on their systems, in particular in BYOD contexts), then the users can setup custom access points which gives access to, at least, their machine.

Rory McCune provided some information on the types of solutions which generally are used in large corporates, where they work well, including NAC and port lockdowns. Lie Ryan’s comments tend to be appropriate on smaller networks.

k1DBLITZ also focuses on the use of technical solutions in addition to policy, and JasperWallace recommends looking for and blocking unapproved MAC addresses, and further answers discuss wireless scanning and scripted checks.

Overall, it would seem that a mixture of technical and management controls is required, with the balance depending on your specific environment.

QoTW #42: Would publishing a network diagram make the network less secure?

I chose this week’s Question of the Week, saber tabatabaee yazdi’s “Would publishing a network diagram make the network less secure?” because this is a point which seems to be often misunderstood.

Saber asked this question because he had come across various websites designed to let people share their network diagrams and designs in order that others can comment on them and provide guidance and he wondered what the risks would be from this.

As an example, this diagram from www.ratemynetworkdiagram.com provides IP addresses, host names and even descriptions:

AJ Henderson provided the very valid comment that security through obscurity is not security, but admits that any network will have some weaknesses, and avoiding giving this information to a potential attacker is probably advised.

My answer is taken from the experience of managing many hundreds of penetration tests. My take on it is:

having a map helps me target my attack, avoiding possible sensors, honeypots etc and aiming at high value targets or sources of information. This can speed up an attack immensely, reducing the defender’s chance of preventing it.

But the value from these sites is that you can have obvious mistakes pointed out to you – peer review can be a very valuable thing. So how can you do that safely?

To reduce risk, some steps you can take are:

- remove addresses, function titles etc

- only include sections of the network

- post under an anonymous profile

- include fake network sections

An attacker will still get information, but it hopefully won’t be enough to let them navigate your entire network.

A Brief Introduction to auditd

The auditd subsystem is an access monitoring and accounting system for Linux developed and maintained by Red Hat. It was designed to integrate pretty tightly with the kernel and watch for interesting system calls. Additionally, likely because of this level of integration and detailed logging, it is used as the logger for SELinux.

All in all, it is a pretty fantastic tool for monitoring what’s happening on your system. Since it operates at the kernel level this gives us a hook into any system operation we want. We have the option to write a log any time a particular system call happens, whether that be unlink or getpid. We can monitor access to any file, all network traffic, really anything we want. The level of detail is pretty phenomenal and, since it operates at such a low level, the granularity of information is incredibly useful.

The biggest downfall is actually a result of the design that makes it so handy. This is itself a logging system and as a result does not use syslog. The good thing here is that it doesn’t have to rely on anything external to operate, so a typo in your (syslog|rsyslog|syslog-ng).conf file won’t result in losing your system audit logs. As a result you’ll have to manage all the audit logging using the auditd suite of tools. This means any kind of log collection, organization, or archiving may not work with these files, including remote logging. As an aside, auditd does have provisions for remote logging, however they are not as trivial as we’ve come to expect from syslog.

Thanks to the level of integration that it provides your auditd configurations can be quite complex, but I’ve found that there are primarily only two options you need to know.

- -a exit,always -S <syscall>

- -w <filename>

The first of these generates a log whenever the listed syscall exits, and the second whenever the listed file is modified. Seems pretty easy right? It certainly can be, but it does require some investigation into what system calls interest you, particularly if you’re not familiar with OS programming or POSIX. Fortunately for us there are some standards that give us some guidance on what to look out for. Let’s take, for example, the Center for Internet Security Red Hat Enterprise Linux 6 Benchmark. The relevant section is “5.2 Configure System Accounting (auditd)” starting on page 99. There is a large number of interesting examples listed, but for our purposes we’ll whittle those down to a more minimal set and assume your /etc/audit/audit.rules looks like this.

    # This file contains the auditctl rules that are loaded
    # whenever the audit daemon is started via the initscripts.
    # The rules are simply the parameters that would be passed
    # to auditctl.

    # First rule - delete all
    -D

    # Increase the buffers to survive stress events.
    # Make this bigger for busy systems
    -b 1024

    -a always,exit -S adjtimex -S settimeofday -S stime -k time-change
    -a always,exit -S clock_settime -k time-change
    -a always,exit -S sethostname -S setdomainname -k system-locale
    -w /etc/group -p wa -k identity
    -w /etc/passwd -p wa -k identity
    -w /etc/shadow -p wa -k identity
    -w /etc/sudoers -p wa -k identity
    -w /var/run/utmp -p wa -k session
    -w /var/log/wtmp -p wa -k session
    -w /var/log/btmp -p wa -k session
    -w /etc/selinux/ -p wa -k MAC-policy

    # Disable adding any additional rules - note that adding new rules will require a reboot
    -e 2

Based on our earlier discussion we should be able to see that we generate a log message every time any of the following system calls exit: adjtimex, settimeofday, stime, clock_settime, sethostname, setdomainname. This will let us know whenever the time gets changed or if the host or domain name of the system get changed.

We’re also watching a few files. The first four (group, passwd, shadow, sudoers) will let us know whenever users get added or modified, or have their privileges changed. The next three files (utmp, wtmp, btmp) store the current login state of each user, login/logout history, and failed login attempts respectively. So monitoring these will let us know any time an account is used or a login attempt fails, or more specifically whenever these files get changed, which will include malicious covering of tracks. Lastly, we’re watching the directory ‘/etc/selinux/’. Directories are a special case in that this will cause the system to recursively monitor the files in that directory. There is a special caveat that you cannot watch ‘/’.

When watching files we also added the option ‘-p wa’. This tells auditd to only watch for (w)rites or (a)ttribute changes. It should be noted that for write (and read for that matter) we aren’t actually logging on those system calls. Instead we’re logging on ‘open’ if the appropriate flags are set.

It should also be said that the logs are also rather…complete. As an example I added the system call rule for sethostname to a Fedora 17 system, with audit version 2.2.1. This is the resultant log from running “hostname audit-test.home.private” as root.

    type=SYSCALL msg=audit(1358306046.744:260): arch=c000003e syscall=170 success=yes exit=0 a0=2025010 a1=17 a2=7 a3=18 items=0 ppid=23922 pid=26742 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts4 ses=16 comm="hostname" exe="/usr/bin/hostname" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key="system-locale"

There are gobs of fields listed, however the ones that interest me the most are the various field names containing the letters “id”, “exe” and that ugly string of numbers in the first parens. The first bit, 1358306046.744, is the timestamp of the event in epoch time. The exe field contains the full path to the binary that was executed. Useful, since we know what was run, but it does not contain the full command line including arguments. Not ideal.
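That “ugly string of numbers” is easy to turn into something readable. Here is a small Python sketch; the helper name `audit_stamp` is made up for this example, and it assumes the seconds.milliseconds:serial layout seen in the record above.

```python
import re
from datetime import datetime, timezone

# msg=audit(<epoch seconds>.<milliseconds>:<event serial>)
AUDIT_MSG = re.compile(r"msg=audit\((\d+)\.(\d{3}):(\d+)\)")


def audit_stamp(line: str):
    """Return (UTC datetime, event serial) taken from a record's msg field."""
    match = AUDIT_MSG.search(line)
    if match is None:
        raise ValueError("no msg=audit(...) field found")
    seconds, millis, serial = (int(group) for group in match.groups())
    when = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return when.replace(microsecond=millis * 1000), serial


when, serial = audit_stamp(
    "type=SYSCALL msg=audit(1358306046.744:260): arch=c000003e syscall=170"
)
print(when.isoformat(), serial)
```

For the record above this works out to 03:14:06 UTC on 16 January 2013, event number 260. The number after the colon is the event serial that ties together all the records belonging to one event.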

Next we see that the command was run by root, since the euid is 0. Interestingly, the field auid (called audit uid) contains 1000, which is the uid of my regular user account on that host. The auid field actually contains the user id of the original logged in user for this login session. This means that even though I used “su -” to gain a root shell the auditing subsystem still knows who I am. Using su to gain a root shell has always been the bane of account auditing, but the auditd system records enough information to usefully identify a user. It does not make up for the lack of command line options, but certainly makes me feel better about it.

These examples, while handy, are also only the tip of the iceberg. One would be hard pressed to find a way to get more detailed audit logging than is available here. To help make our way down the rabbit hole of auditd let’s turn this into a series. We’ll collect ideas for use cases and work up an audit config to meet the requirements, much like what I ended up doing on this security.stackexchange.com answer.

If this sounds like fun let me know in the comments and I’ll work up a way to collect the information. Until then…Happy Auditing!

There will be future auditd posts, so check back regularly on the auditd tag.

Misadventures with tcpdump Filters

For quite some time I’ve been running into a tricksome situation with tcpdump. While doing analysis I kept running into the situation where none of my filters would work right. For example, let’s presume I have an existing capture file that was taken off a mirrored port. According to the manpage for pcap-filter this command is a syntactically valid construction:

tcpdump -nnr capturefile.pcap host 10.10.15.15

It does not, however, produce any output. I can verify that traffic exists for that host by doing:

tcpdump -nnr capturefile.pcap | grep 10.10.15.15

This does in fact produce the results I want, but is a pretty unfortunate work-around. Part of what makes a tool like tcpdump so useful is the highly complex filtering language available.

I finally sucked up my ego and asked some of my fellows in the security.stackexchange chat room, The DMZ. While our conversation wasn’t strictly helpful, since they seemed just as puzzled as me, talking out the problem did help me come up with some better google search terms.

I discovered the problem is entirely to do with 802.1q tagged packets. Since this pcap was taken from a mirrored port of a switch using VLANs it follows all the same rules as a trunked interface. So what that means is that my above filter gets translated as, “Look in the source and destination address fields of the IP header of this standard packet.” Anyone who has had to decode packets, or parse out network traffic, would probably assume that while parsing can be tricky, this lookup shouldn’t be very difficult. I definitely fell into the same boat and boy was I wrong.

My first assumption was that when applying a BPF to a packet capture the following order of events occurred:

- Read in packet

- Parse packet into identifiable tokens

- Check filter strings against tokenized packet

As it turns out, this isn’t what happens at all. Our simple filter above is really just a macro. The host macro will parse the packet, but it is a rather simple parser. By and large this is good, since we want the filters to be fast. In some situations this is bad. For the purposes of discussion let’s make two assumptions:

- The host macro is nothing more than “src host ip or dst host ip”

- The src and dst macros are nothing more than “src = 13th-16th bytes of IP header” and “dst = 17th-20th bytes of IP header”

While the macro language as a whole is really much more complicated, this simplistic view is good enough for this discussion, and in my opinion good enough for normal use.

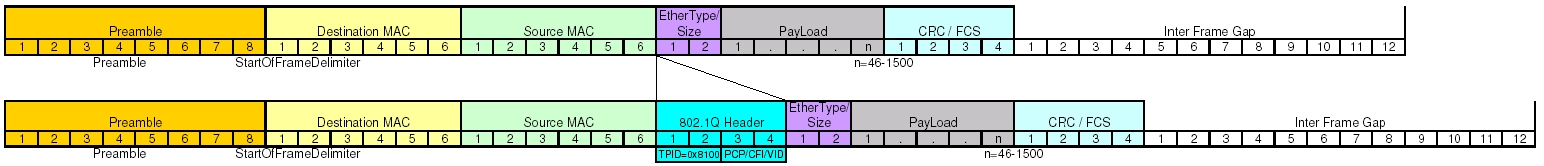

Now that we’re talking about byte offsets it’s time to pull out our handy dandy header references. Since we’re dealing with IP datagrams embedded in Ethernet frames, let’s take a moment to inspect both headers.

As we can see, the Ethernet frame header is pretty well static, and easily understood. The IP datagram header is variable length, but the options are all at the end of the header, so for our purposes today we can consider it a fixed length as well. This makes our calculations very easy and look something like this.

- This is known to be Ethernet, so add up the length of the header fields (22 bytes if you count the preamble and start-of-frame delimiter, 14 bytes as the frame appears in a capture) and skip past those.

- Source address in the IP header starts at the 13th byte, so check those 4 bytes.

- Destination address in the IP header starts at the 17th byte, so check those 4 bytes.

So now we’re getting somewhere, and we actually run face first into a surprise. Remember that I said my example packet capture was taken off an interface that passes the VLAN information. Take another look at the headers and see if you can identify the field that contains the VLAN tag information. Hint: You won’t because it’s not there.

Enabling VLANs actually does something interesting to your Ethernet frame header. It adds a few extra fields to your header, for a total of 4 extra bytes. In most cases you won’t see this. Generally each switch port has two modes, access and trunk. An access port is one that you would hand out to a user. This will get connected directly to a computer or a standard unmanaged mini-switch. A trunk port is extra special and is often only used either to connect the networking infrastructure or a server that needs to access several different networks. The extra VLAN header information is only useful over a trunk, and as such is stripped out before the frame is transmitted on an access port. So on an access port, that header doesn’t exist, so your dumb byte offset math works pretty well. Remember at the beginning when I said mirrored ports followed many of the same rules as a trunk? This is where we begin to see it. Let’s now take a look at what happens to the Ethernet frame header when we add in the VLAN tag information.

Knowing that we were doing some dumb parsing by counting byte offsets, and all of our numbers were based on an Ethernet frame without the VLAN information, we should finally begin to understand our problem. We are dealing with an off-by-4 byte error. According to our IP header quick reference we can do a quick offset calculation and see that we’re attempting to compare the source address against the combination of TimeToLive+Protocol+HeaderChecksum and attempting to compare the destination address against the source address.
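The off-by-4 effect is easy to reproduce with a hand-built frame. The sketch below follows the simplified fixed-offset model from this post, not the real BPF compiler. The helper names, the placeholder MAC addresses, the dummy checksum and the 192.0.2.1 peer address are all made up for illustration, and it assumes the 14-byte Ethernet header as it is stored in a capture (no preamble).

```python
import socket
import struct

ETH_HEADER_LEN = 14   # dst MAC (6) + src MAC (6) + EtherType (2), as stored in a capture
SRC_OFFSET = 12       # source address: 13th-16th bytes of the IP header
DST_OFFSET = 16       # destination address: 17th-20th bytes of the IP header


def build_frame(src: str, dst: str, vlan_id=None) -> bytes:
    """Build a minimal Ethernet + IPv4 frame, optionally carrying an 802.1Q tag."""
    macs = bytes(6) + bytes(6)                      # placeholder MAC addresses
    tag = b"" if vlan_id is None else struct.pack("!HH", 0x8100, vlan_id)
    ethertype = struct.pack("!H", 0x0800)           # IPv4
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20, 0, 0,                          # version/IHL, TOS, length, ID, flags
        64, 6, 0xBEEF,                              # TTL, protocol (TCP), dummy checksum
        socket.inet_aton(src), socket.inet_aton(dst),
    )
    return macs + tag + ethertype + ip_header


def naive_addresses(frame: bytes):
    """Read the address fields at fixed offsets, ignoring any VLAN tag."""
    ip_start = ETH_HEADER_LEN
    src = frame[ip_start + SRC_OFFSET: ip_start + SRC_OFFSET + 4]
    dst = frame[ip_start + DST_OFFSET: ip_start + DST_OFFSET + 4]
    return src, dst


plain = build_frame("10.10.15.15", "192.0.2.1")
tagged = build_frame("10.10.15.15", "192.0.2.1", vlan_id=100)

print([socket.inet_ntoa(addr) for addr in naive_addresses(plain)])
print(naive_addresses(tagged)[0].hex())               # TTL, protocol and checksum bytes
print(socket.inet_ntoa(naive_addresses(tagged)[1]))   # really the source address
```

On the untagged frame the fixed offsets land on the two addresses. On the tagged frame the “source” read picks up the TTL, protocol and checksum bytes, and the “destination” read picks up the real source address.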

You should be thinking, “Now Scott, yes, this a problem, but we’ll still see half of the communication because when we check for destination address we’ll still end up matching against source!” You would be absolutely correct, except for one problem. As I mentioned before the filter isn’t completely as dumb as we’re pretending that it is. The base assumption for BPF is that when you say host, you’re talking about an IP address. So the filter does actually check the version field to see if the packet is IPv4 or IPv6, values 4 or 6 respectively. The IP version field is the higher order nibble of the first byte. Since we have an off-by-4 byte situation what value are we actually checking? The answer is the higher order nibble of the third byte in the VLAN header. This byte contains the 3 bit PCP field and the 1 bit CFI flag. The 3 bit PCP is actually the 802.1p service priority used in Quality of Service systems.

In most cases 802.1p is unused, which means a QoS of 0, which means those 3 bits are unset. The 1 bit CFI flag, also called Drop Eligible or DE, is used by PCP to say that in the presence of QoS based congestion this packet can be dropped. Since 802.1p is generally not used, this field is also typically 0. In normal situations our filter reads the 0, which is neither a 4 nor a 6, and so our filter automatically rejects. However, since the priority and DE fields are set by QoS systems we could have a situation where the filter accidentally works. If ever 802.1p based QoS is used, the DE flag is unset, and the priority is set to 2 on a scale of 0 (best effort) to 7 (highest) the filter will still believe we’re inspecting an IPv4 packet. Or if the priority is set to 3 and the DE flag is unset then the filter will believe we’re looking at an IPv6 packet. This is all a bit of an aside since it has been my experience that QoS is rarely used, however it does present an interesting edge case.
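The arithmetic behind that edge case fits in a few lines of Python. The function name `misread_version` is made up for this sketch; it simply packs the 3 PCP bits and the DE bit into the nibble that the filter mistakes for the IP version.

```python
def misread_version(pcp: int, dei: int) -> int:
    """High nibble of the first tag-control byte: 3 PCP bits, then the DE/CFI bit."""
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("pcp must be 0-7 and dei must be 0 or 1")
    return (pcp << 1) | dei


# Walk every priority/DE combination and report the ones that look like IP.
for pcp in range(8):
    for dei in (0, 1):
        nibble = misread_version(pcp, dei)
        if nibble in (4, 6):
            print(f"PCP={pcp} DE={dei} is misread as IPv{nibble}")
```

Only two of the sixteen combinations collide: priority 2 with DE unset reads as version 4, and priority 3 with DE unset reads as version 6.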

Ignoring any possibility of QoS in play and going back to straight up 802.1q tagged packets what we have to do instead is modify the filter string to tell the BPF to treat tagged packets as tagged, like so:

tcpdump -nnr capturefile.pcap vlan and host 10.10.15.15

What we end up doing here is filtering only for packets containing a VLAN tag and either of the address fields in the IP header contains 10.10.15.15. By explicitly applying the vlan macro the filtering system will properly detect the VLAN header and account for it when processing the other embedded protocols. It is worth noting that this will only match on packets that contain the VLAN header. If you want to generalize your filter, say you don’t know or your capture contains a mix of packets that may or may not have a VLAN tag, you can complicate your filter to do something like this.

tcpdump -nnr capturefile.pcap 'host 10.10.15.15 or ( vlan and host 10.10.15.15 )'
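To show what a tag-aware match has to do, here is a rough Python equivalent of that combined filter. It is a sketch of the idea only, not how libpcap compiles the expression; `frame_matches_host` and the hand-built test frames are hypothetical, and it handles at most one 802.1Q tag and IPv4 only.

```python
import socket
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_VLAN = 0x8100


def frame_matches_host(frame: bytes, host: str) -> bool:
    """Return True if an IPv4 frame, tagged or untagged, has host as src or dst."""
    if len(frame) < 18:                           # too short to hold the fields we read
        return False
    offset = 12                                   # skip the two MAC addresses
    (ethertype,) = struct.unpack_from("!H", frame, offset)
    offset += 2
    if ethertype == ETHERTYPE_VLAN:               # skip the tag control bytes, read the real type
        (ethertype,) = struct.unpack_from("!H", frame, offset + 2)
        offset += 4
    if ethertype != ETHERTYPE_IPV4 or len(frame) < offset + 20:
        return False
    wanted = socket.inet_aton(host)
    return wanted in (frame[offset + 12: offset + 16], frame[offset + 16: offset + 20])


# Two hand-built frames carrying the same IPv4 header, one with VLAN 100.
ip_header = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                        socket.inet_aton("10.10.15.15"), socket.inet_aton("192.0.2.1"))
untagged = bytes(12) + struct.pack("!H", ETHERTYPE_IPV4) + ip_header
tagged = bytes(12) + struct.pack("!HHH", ETHERTYPE_VLAN, 100, ETHERTYPE_IPV4) + ip_header

print(frame_matches_host(untagged, "10.10.15.15"), frame_matches_host(tagged, "10.10.15.15"))
```

The important step is shifting the IP header offset by 4 bytes once the 0x8100 tag type is seen, which is the adjustment the vlan keyword asks the filter to make.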

Finding out that VLANs are used on the networks you’re dealing with, and if the infrastructure is any more complicated than a 10 person office they probably are, has some pretty far reaching consequences. Any time you apply pcap filters to a capture you’ll need to take into account 802.1q tags. You’ll definitely want to keep this in mind when using BPF files to distribute load across multiple snort processes or when using BPFs to do targeted analysis using tools like Argus. Depending on the configuration of your interface, your monitoring port may actually have a native vlan. If that is the case you’ll find that you do receive data, which may disguise the fact that you’re not receiving all of the data.

QOTW #32 – How can mini-computers (like Raspberry Pi) be applied to IT security?

This week’s question is nominated by me: How can mini-computers (like Raspberry Pi) be applied to IT security?

There are 2 parts to the question.

1) What practical applications do such mini-computers have in IT security, and what role could they play in cyber-defense, network analysis, etc.?

2) What risks might they introduce to a local network environment that should be considered?

The top rated answer, by Tobias, suggests that mini-computers like the Raspberry Pi could be used as cheap penetration testing tools, linking to the Pwnpi distribution, a specialized Linux distro for the Raspberry Pi. The answer also links to the Pwnplug, a small, compact penetration testing drop box that has a full suite of penetration testing tools.

The answer by Rofls reinforces the answer by Tobias, stating that mini-computers like the Raspberry Pi could be used as cheap, disposable hardware for hacking when coupled with a USB wireless adapter. The hacker would only lose a small amount of money if the Raspberry Pi was discovered.

The answer by Diarmaid links to IPFire, which can turn the Raspberry Pi into a cheap firewall or IDS/IPS.

adamo also suggests that the Pi can be used to build cheap sensor networks to monitor Wi-Fi, Ethernet or Bluetooth. He also suggests that the Pi can be used as an emergency DHCP or DNS server in situations where a quick fix is needed.

The Raspberry Pi is an example of a growing class of cheap hardware that can be used for hacking and penetration testing purposes. HakShop has a great set of cheap hardware that can perform tasks that used to require dedicated equipment costing thousands of dollars. This makes the threat of hacking even more prevalent as more users are able to afford the hardware needed to perform specialised tasks.

This is clearly going to present a new and interesting challenge for security professionals to defend against. I look forward to seeing what solutions the many bright minds in this industry can come up with.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

QotW #27: Open Source vs Closed Source Systems

Question of the Week number 27 is a contentious, hotly debated issue in the software world. The question itself was posed by Security.SE user blunders, who quoted the argument often used in the defence of open source as a business model:

My understanding is that open source systems are commonly believed to be more secure than closed source systems. … A common position against closed source systems is that a lack of awareness is at best a weak security measure; commonly referred to as security through obscurity.

and then we hit the question itself:

Question is, are open source systems on average better for security than closed source systems?

We’ll begin with the top voted answer by SE user Jesper Mortensen, who explained that the whole notion of being able to generally compare open versus closed source systems is a bad one when there are so many other factors involved. To compare two systems you really need to look beyond the licensing model they use, and look at other factors too. I’ll quote Jesper’s list in its entirety:

- Licenses.

- Access to source code.

- Very different incentive structures, for-profit versus for fun.

- Very different legal liability situations.

- Different, and wildly varying, team sizes and team skillsets.

Of course, this is by no means a complete treatment of the possible differences.

Jesper also highlighted the importance of comparing pieces of software that solve specific domain issues – not software in general. You have to do this, to even remotely begin to utilise the list above.

Security.SE legend Thomas Pornin also answered this question. I’ll begin coverage of his answer with his summary:

… the “opensource implies security” idea is overrated. What is important is the time (and skill) devoted to the tracking and fixing of security issues, and this is mostly orthogonal to the question of openness of the source.

The main thrust of Thomas’ answer was that maintained software is more secure than unmaintained software. As an example, Thomas cited OpenSSL remote execution bugs that had been left lying in the code tree unfixed for some time, highlighting a possible advantage of closed source systems: when they are developed by companies, the effort and time spent on QA is generally higher than in open source systems.

Thomas’ answer also covers the counterpoint to this – that closed source systems can easily conceal security issues, too, and that having the source allows you to convince yourself of security more easily.

The next answer was provided by Ori, who lists a set of premises used for justifying the security of open source:

- The Customization premise

- The License Management premise

- The Open Format premise

- The Many Eyes premise

- The Quick Fix premise

As Ori rightly says, the customization premise means a company can take an open source platform and add an additional set of security controls. Ori quotes NSA’s SELinux as an example of such a project. For companies with the time and money to produce such platforms and make such fixes, this is clearly an advantage for open source systems.

Ori covers the license management and open format arguments from a compliance and resilience perspective. Using open source software (and making modifications) comes with certain license constraints, and the potential to violate these constraints is clearly a risk to the business. Likewise, for business continuity purposes, the ability to avoid being locked in to a specific platform is a huge win for any company.

Finally, an answer by yours truly. The major thrust of my answer is succinctly summarised by AviD’s comment on it:

I’ve always proposed an amendment to Linus’ Law: “Given enough trained eyeballs, most bugs are relatively shallow”

I explained, through use of a rather intriguing vulnerability introduced into development kernels by a compiler bug, that having the knowledge to detect these issues is critical to security. The source being available does not directly guarantee you have the knowledge to detect such issues.

That’s it for answers. As you can see, none of us took sides generally on the “open versus closed” debate, instead pointing out that there are many factors to consider beyond the license under which source is available. I think the whole set of answers is best summarised by this.josh’s comment on the top voted answer, so I’ll leave you with that:

I agree. What matters most is how many people with knowledge and experience in the security domain actively design, implement, test, and maintain the software. Any project where no-one is looking at security will have significant vulnerabilities, regardless of how many people are on the project.

QotW #24: Why do people tell me not to use VLANs for security?

This week’s question of the week was asked by user jtnire, and it is one very near and dear to security professionals who came out of networking or systems backgrounds. He was doing some network design and came across the classic statement that “VLANs are Not a Security Tool”.

As of this writing, jtnire had not accepted any answers, however user Rory McCune was leading the pack of answers. Rory focused primarily on the classic human problem of misconfiguration, particularly easy when we’re talking about typing Gi/0/4 when you meant Gi/1/4, as opposed to plugging a cable into the wrong port. He also specifically called out VLAN Hopping, which can abuse a misconfiguration to allow a malicious user access to a non-authorized VLAN.

User and moderator Rory Alsop, speaking from an audit perspective, expanded on what the other Rory mentioned and focused more generally on what would make him double-take. He pointed out that VLANs are generally used for cheap network segmentation and that if you’re using them as a security tool, then you probably want to do it right and use a physically isolated network instead.

Jakob Borg came in with a completely different approach. He explained that, as an ISP, VLANs are a crucial component of their environment and when done right can be a very powerful tool from both a security and a service perspective. User jliendo largely agreed with Jakob that configuration is king, and that a properly configured VLAN is an excellent tool in your security arsenal. He also went into more technical detail about some of the possible attacks against VLANs and how they can be mitigated.

In this author’s opinion this is a fantastic question, as VLANs are becoming an extremely common mechanism for network isolation. The answers also did a great job of coming at the problem from all manner of angles, from external auditors to in-the-trenches technicians.
