Archive for August, 2012

Misadventures with tcpdump Filters

For quite some time I’ve been running into a tricksome situation with tcpdump: while doing analysis, none of my filters would work right. For example, let’s presume I have an existing capture file that was taken off a mirrored port. According to the manpage for pcap-filter, this command is a syntactically valid construction:

tcpdump -nnr capturefile.pcap host 10.10.15.15

It does not, however, produce any output. I can verify that traffic exists for that host by doing:

tcpdump -nnr capturefile.pcap | grep 10.10.15.15

This does in fact produce the results I want, but is a pretty unfortunate work-around. Part of what makes a tool like tcpdump so useful is the highly complex filtering language available.

I finally sucked up my ego and asked some of my fellows in the security.stackexchange chat room, The DMZ. While our conversation wasn’t strictly helpful, since they seemed just as puzzled as me, talking out the problem did help me come up with some better google search terms.

I discovered the problem is entirely to do with 802.1q tagged packets. Since this pcap was taken from a mirrored port of a switch using VLANs, it follows all the same rules as a trunked interface. What that means is that my filter above gets translated as, “Look in the source and destination address fields of the IP header of this standard packet.” Anyone who has had to decode packets, or parse out network traffic, would probably assume that while parsing can be tricky, this lookup shouldn’t be very difficult. I definitely fell into the same boat, and boy was I wrong.

My first assumption was that when applying a BPF to a packet capture the following order of events occurred:

- Read in packet

- Parse packet into identifiable tokens

- Check filter strings against tokenized packet

As it turns out, this isn’t what happens at all. Our simple filter above is really just a macro. The host macro will parse the packet, but it is a rather simple parser. By and large this is good, since we want the filters to be fast. In some situations this is bad. For the purposes of discussion let’s make two assumptions:

- The host macro is nothing more than “src host ip or dst host ip”

- The src and dst macros are nothing more than “src = 13th-16th bytes of IP header” and “dst = 17th-20th bytes of IP header”

While the macro language as a whole is really much more complicated, this simplistic view is good enough for this discussion, and in my opinion good enough for normal use.

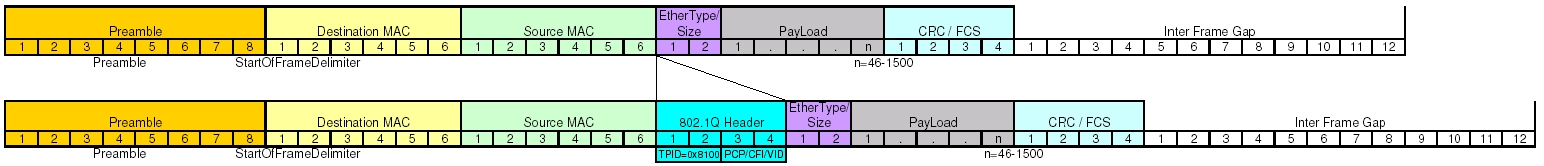

Now that we’re talking about byte offsets it’s time to pull out our handy dandy header references. Since we’re dealing with IP datagrams embedded in Ethernet frames, let’s take a moment to inspect both headers.

As we can see, the Ethernet frame header is pretty well static, and easily understood. The IP datagram header is variable length, but the options are all at the end of the header, so for our purposes today we can consider it a fixed length as well. This makes our calculations very easy and look something like this.

- This is known to be Ethernet, so skip past the Ethernet header (14 bytes: destination MAC, source MAC and EtherType; the preamble and start-of-frame delimiter are not part of the captured data).

- Source address in the IP header starts at the 13th byte (offset 12), so check those 4 bytes.

- Destination address in the IP header starts at the 17th byte (offset 16), so check those 4 bytes.
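To make the simplified model concrete, here is a minimal Python sketch of the “dumb” byte-offset filter described above. It assumes the captured frame starts at the destination MAC address (pcap data does not include the preamble), so the IP header begins at byte 14, and it ignores IP options. All function names, MAC addresses and field values are illustrative only; this is not how libpcap is actually implemented.

```python
import socket
import struct

# Assumption: the frame starts at the destination MAC, as in pcap data (no preamble/SFD).
ETH_HEADER_LEN = 14  # dst MAC (6) + src MAC (6) + EtherType (2)


def build_ipv4_header(src: str, dst: str) -> bytes:
    """Minimal 20-byte IPv4 header with no options; the checksum is left as 0."""
    return struct.pack(
        "!BBHHHBBH4s4s",
        0x45,  # version 4, IHL 5 (20 bytes)
        0,     # DSCP/ECN
        20,    # total length (header only, for this sketch)
        0,     # identification
        0,     # flags / fragment offset
        64,    # TTL
        6,     # protocol (TCP)
        0,     # header checksum (not computed)
        socket.inet_aton(src),
        socket.inet_aton(dst),
    )


def build_untagged_frame(src_ip: str, dst_ip: str) -> bytes:
    """Ethernet II frame: zeroed dst MAC, made-up src MAC, EtherType 0x0800 (IPv4)."""
    macs = bytes(6) + bytes.fromhex("020000000001")
    return macs + struct.pack("!H", 0x0800) + build_ipv4_header(src_ip, dst_ip)


def naive_host_match(frame: bytes, ip: str) -> bool:
    """'host ip' modelled as 'src host ip or dst host ip' at fixed byte offsets."""
    target = socket.inet_aton(ip)
    src = frame[ETH_HEADER_LEN + 12 : ETH_HEADER_LEN + 16]  # 13th-16th bytes of the IP header
    dst = frame[ETH_HEADER_LEN + 16 : ETH_HEADER_LEN + 20]  # 17th-20th bytes of the IP header
    return target in (src, dst)


frame = build_untagged_frame("10.10.15.15", "192.168.1.20")
print(naive_host_match(frame, "10.10.15.15"))  # True
print(naive_host_match(frame, "10.10.15.16"))  # False
```

On an untagged frame the fixed offsets land exactly on the address fields, which is why this naive approach works fine on an access port.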

So now we’re getting somewhere, and we actually run face first into a surprise. Remember that I said my example packet capture was taken off an interface that passes the VLAN information. Take another look at the headers and see if you can identify the field that contains the VLAN tag information. Hint: You won’t, because it’s not there.

Enabling VLANs actually does something interesting to your Ethernet frame header: it adds a few extra fields (the 802.1q tag, 4 bytes in total) between the source MAC address and the EtherType. In most cases you won’t see this. Generally each switch port has two modes, access and trunk. An access port is one that you would hand out to a user; it gets connected directly to a computer or a standard unmanaged mini-switch. A trunk port is extra special and is often only used to connect either the networking infrastructure or a server that needs to access several different networks. The extra VLAN header information is only useful over a trunk, and as such is stripped out before the frame is transmitted on an access port. So on an access port that header doesn’t exist, and your dumb byte offset math works pretty well. Remember at the beginning when I said mirrored ports followed many of the same rules as a trunk? This is where we begin to see it. Let’s now take a look at what happens to the Ethernet frame header when we add in the VLAN tag information.

Knowing that we were doing some dumb parsing by counting byte offsets, and that all of our numbers were based on an Ethernet frame without the VLAN information, we should finally begin to understand our problem: we are dealing with an off-by-4-byte error. A quick offset calculation against our IP header reference shows that we’re comparing the source address against the combination of TimeToLive+Protocol+HeaderChecksum, and comparing the destination address against the actual source address.
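The off-by-4 error can be reproduced with a hand-built frame. This Python sketch (all field values are assumptions chosen for illustration; 0xBEEF is a placeholder, not a computed checksum) inserts an 802.1q tag with TPID 0x8100 between the source MAC and the EtherType, then reads the bytes at the offsets a filter expecting a 14-byte Ethernet header would use.

```python
import socket
import struct

# Minimal IPv4 header: version/IHL, DSCP/ECN, total length, ID, flags/frag,
# TTL, protocol, checksum, source address, destination address.
ip_header = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 20, 0, 0,
    64,                                # TTL
    6,                                 # protocol (TCP)
    0xBEEF,                            # placeholder checksum
    socket.inet_aton("10.10.15.15"),   # source
    socket.inet_aton("192.168.1.20"),  # destination
)

macs = bytes(6) + bytes.fromhex("020000000001")
vlan_tag = struct.pack("!HH", 0x8100, 10)  # TPID 0x8100; TCI: PCP 0, DEI 0, VLAN ID 10
tagged_frame = macs + vlan_tag + struct.pack("!H", 0x0800) + ip_header

# The naive filter still assumes the IP header starts at byte 14.
naive_src = tagged_frame[14 + 12 : 14 + 16]
naive_dst = tagged_frame[14 + 16 : 14 + 20]

print(naive_src.hex())              # 4006beef (TTL, protocol, checksum)
print(socket.inet_ntoa(naive_dst))  # 10.10.15.15 (the real source address)
```

The “source” slot ends up holding TTL, protocol and checksum, while the “destination” slot holds the real source address, exactly the shift described above.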

You should be thinking, “Now Scott, yes, this is a problem, but we’ll still see half of the communication, because when we check for the destination address we’ll still end up matching against the source!” You would be absolutely correct, except for one problem. As I mentioned before, the filter isn’t quite as dumb as we’re pretending it is. The base assumption for BPF is that when you say host, you’re talking about an IP address. So the filter does actually check the version field to see if the packet is IPv4 or IPv6, values 4 or 6 respectively. The IP version field is the higher order nibble of the first byte. Since we have an off-by-4 byte situation, what value are we actually checking? The answer is the higher order nibble of the third byte in the VLAN header. That nibble contains the 3 bit PCP field and the 1 bit CFI flag. The 3 bit PCP is actually the 802.1p service priority used in Quality of Service systems.

In most cases 802.1p is unused, which means a QoS of 0, which means those 3 bits are unset. The 1 bit CFI flag, also called Drop Eligible or DE, is used alongside PCP to say that in the presence of QoS based congestion this packet can be dropped. Since 802.1p is generally not used, this field is also typically 0. In normal situations our filter reads the 0, which is neither a 4 nor a 6, and so our filter automatically rejects the packet. However, since the priority and DE fields are set by QoS systems, we could have a situation where the filter accidentally works. If 802.1p based QoS is ever used, the DE flag is unset, and the priority is set to 2 on a scale of 0 (best effort) to 7 (highest), the filter will believe we’re inspecting an IPv4 packet. Or if the priority is set to 3 and the DE flag is unset, then the filter will believe we’re looking at an IPv6 packet. This is all a bit of an aside, since in my experience QoS is rarely used; however, it does present an interesting edge case.
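The arithmetic behind this edge case can be checked directly. Under the post’s simplified model (the filter reads the IP version nibble, which in a tagged frame lands on the top nibble of the TCI), that nibble is the 3 bit PCP followed by the 1 bit DE flag. This small Python sketch, with a hypothetical helper name, enumerates every combination and reports the ones that would look like IPv4 or IPv6.

```python
def tci_high_nibble(pcp: int, dei: int) -> int:
    """High nibble of the TCI's first byte: 3-bit PCP followed by the 1-bit DE/CFI flag."""
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0-7 and DE must be 0 or 1")
    return (pcp << 1) | dei


for pcp in range(8):
    for dei in (0, 1):
        nibble = tci_high_nibble(pcp, dei)
        if nibble in (4, 6):
            print(f"PCP={pcp} DE={dei} -> nibble {nibble} (looks like IPv{nibble})")
# PCP=2 DE=0 -> nibble 4 (looks like IPv4)
# PCP=3 DE=0 -> nibble 6 (looks like IPv6)
```

Only priority 2 and priority 3, each with DE unset, produce a 4 or a 6, matching the two cases described above.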

Ignoring any possibility of QoS in play and going back to straight up 802.1q tagged packets what we have to do instead is modify the filter string to tell the BPF to treat tagged packets as tagged, like so:

tcpdump -nnr capturefile.pcap vlan and host 10.10.15.15

What we end up doing here is filtering only for packets that contain a VLAN tag and where either of the address fields in the IP header contains 10.10.15.15. By explicitly applying the vlan macro, the filtering system will properly detect the VLAN header and account for it when processing the other embedded protocols. It is worth noting that this will only match on packets that contain the VLAN header. If you want to generalize your filter (say you don’t know, or your capture contains a mix of packets that may or may not have a VLAN tag), you can complicate it to do something like this:

tcpdump -nnr capturefile.pcap 'host 10.10.15.15 or ( vlan and host 10.10.15.15 )'
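As a rough model of what the combined filter achieves, here is a Python sketch assuming IPv4 and at most one 802.1q tag (no stacked/QinQ tags). Unlike the simplified model earlier, it decides whether a tag is present by looking at the EtherType slot, which holds 0x8100 in a tagged frame. The function names are hypothetical. In real libpcap, the pcap-filter manpage notes that the first `vlan` keyword changes the decoding offsets for the remainder of the expression, so the order of the two alternatives matters; `tcpdump -d` prints the compiled BPF program if you want to see the actual offsets.

```python
import socket
import struct

ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_VLAN = 0x8100  # 802.1q tag protocol identifier (TPID)


def ipv4_offset(frame: bytes):
    """Offset of the IPv4 header, skipping a single 802.1q tag if present; None if not IPv4."""
    if len(frame) < 14:
        return None
    ethertype, offset = struct.unpack_from("!H", frame, 12)[0], 14
    if ethertype == ETHERTYPE_VLAN:
        if len(frame) < 18:
            return None
        ethertype, offset = struct.unpack_from("!H", frame, 16)[0], 18
    return offset if ethertype == ETHERTYPE_IPV4 else None


def host_match(frame: bytes, ip: str) -> bool:
    """Approximates 'host ip or ( vlan and host ip )' for IPv4 frames."""
    offset = ipv4_offset(frame)
    if offset is None or len(frame) < offset + 20:
        return False
    target = socket.inet_aton(ip)
    return target in (frame[offset + 12 : offset + 16], frame[offset + 16 : offset + 20])


def make_frame(src_ip: str, dst_ip: str, vlan_id=None) -> bytes:
    """Hypothetical test frame: zeroed MACs, optional 802.1q tag, minimal IPv4 header."""
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20, 0, 0, 64, 6, 0,
                     socket.inet_aton(src_ip), socket.inet_aton(dst_ip))
    tag = b"" if vlan_id is None else struct.pack("!HH", ETHERTYPE_VLAN, vlan_id)
    return bytes(12) + tag + struct.pack("!H", ETHERTYPE_IPV4) + ip


print(host_match(make_frame("10.10.15.15", "192.168.1.20"), "10.10.15.15"))              # True
print(host_match(make_frame("10.10.15.15", "192.168.1.20", vlan_id=10), "10.10.15.15"))  # True
```

Both the untagged and the tagged frame now match, which is the behaviour the combined tcpdump expression is after.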

Finding out that VLANs are used on the networks you’re dealing with (and if the infrastructure is any more complicated than a 10-person office, they probably are) has some pretty far-reaching consequences. Any time you apply pcap filters to a capture, you’ll need to take 802.1q tags into account. You’ll definitely want to keep this in mind when using BPF files to distribute load across multiple snort processes, or when using BPFs to do targeted analysis with tools like Argus. Depending on the configuration of your interface, your monitoring port may actually have a native VLAN. If that is the case, frames on the native VLAN arrive untagged and still match your filters, so you’ll find that you do receive data, which may disguise the fact that you’re not receiving all of the data.

QOTW #33 – Communications infrastructure after a nuclear explosion

A few weeks ago, I was sat pondering nuclear bombs. To any of you who frequent the DMZ, this shouldn’t be much of a surprise, as it’s pretty much par-for-the-course for my imagination on a Wednesday night. I started thinking about the electromagnetic pulse released from a nuclear explosion, and how it might affect electronic devices. “But surely,” I thought, “a nuclear bomb would negate any interesting post-blast resilience implications by turning all local electronic devices into glowing dust!”. How wrong I was.

After a bit of research, I came across High-Altitude Nuclear Explosions (HANE). No, this isn’t the title of the latest Michael Bay movie, but rather the concept of detonating a nuclear bomb at an altitude greater than 30km. Whilst this might sound rather ridiculous, certain countries have actually done it. In the early 60s, the USA and the Soviet Union performed a series of HANE tests, in order to better understand the potential for anti-satellite weaponry. These tests showed that the electromagnetic pulse and ensuing radioactive fallout was capable of destroying or damaging satellites in space rather indiscriminately. The tests caused numerous satellites to fail after a short period of time, due to severe radiation damage. What’s really interesting about HANE, though, is that the explosion releases a giant electromagnetic pulse, without the hassle of re-enacting that dream scene from Terminator 2 where Sarah Connor’s skeleton is left clinging onto a chain-link fence.

To quote the Wikipedia article:

This high-altitude EMP occurs between 30 and 50 kilometers (18 and 31 miles) above the Earth’s surface. The potential as an anti-satellite weapon became apparent in August 1958 during Hardtack Teak (a HANE test). The EMP observed at the Apia Observatory at Samoa was four times more powerful than any created by solar storms, while in July 1962 the Starfish Prime test damaged electronics in Honolulu and New Zealand (approximately 1,300 kilometers away), fused 300 street lights on Oahu (Hawaii), set off about 100 burglar alarms, and caused the failure of a microwave repeating station on Kauai, which cut off the sturdy telephone system from the other Hawaiian islands.

That’s one hell of an electromagnetic pulse!

After I’d got over the initial excitement of learning that it is actually theoretically possible to sizzle microchips from space using nukes, I decided to post a question on Security SE. In short, I wanted to know how worldwide communications infrastructure, especially the Internet, was protected from such attacks. This is where our resident nuclear weapons “enthusiast” Thomas Pornin came in, with a wonderfully detailed answer.

It turns out that the Internet is, like a condom machine in the Vatican, amazingly redundant. Obviously not quite the same kind of redundant, but redundant nonetheless. If a network link goes down, packets are routed through other links. It’s a self-healing network. This means that you would have to knock out multiple inter-continental network links in order to cause severe disruption. Furthermore, it has plenty of redundant channels that are likely to still function after a large EMP:

- Point-to-point radio links (e.g. microwave)

- IP over Radio (e.g. AX.25)

- Satellites

- Optical fibre cable

- Free-space optical communication (e.g. laser links)

- Brian Blessed bellowing binary from a tall building.

An interesting issue with radio and satellite communication is temporary disturbance of the ionosphere. The electromagnetic pulse would cause electrons to be jiggled about in the upper atmosphere, creating areas of increased and decreased electron density. A lot of long-range radio communication relies on “bouncing” radio waves off of the ionosphere, which means that such communications are likely to suffer strong interference. Longer wavelength signals are likely to be disrupted more than short wavelength (GHz) signals, due to some interesting physics wizardry I won’t go into.

Of course, in the case of a real war, we’d have to worry about anti-satellite weapons knocking out communications too. Thankfully though, under-sea cables are shielded by a big blanket of water, which absorbs electromagnetic radiation. This is why submarines send up a radio mast or buoy. The good news is that our fastest and most reliable network links will actually end up being our most resilient, because they’re on the sea bed. Furthermore, optical fibre cable is not affected by electromagnetic induction, like copper wiring is. So, if all hell does break loose, the phat-pipes should still be able to serve you your daily dose of StackExchange! Yay!

But not everything relies on hardware interlinks. We also have to take into account the systems that facilitate the core functionality of the internet. An interesting example of this is DNS. There are thirteen root nameservers that facilitate the absolute top tier of DNS functionality. Without the root name servers, we wouldn’t be able to reliably translate domain names into IP addresses. Think of them as a set of mirrors for a global directory, where top-level domains (TLDs) are indexed. These entries point to other DNS servers, which point to other DNS servers, etc. We’re left with a large hierarchical map of DNS resolution. Now, whilst thirteen servers sounds rather flimsy, the actual number of servers involved is much larger. A neat technique called anycast allows multiple physical servers across the world to represent a single root name server. This is not the same as cloud computing, which is often touted as a panacea for uptime-critical solutions. Guess what? The cloud is not redundant! But I digress. In order to take down the entire DNS system, you’d need to nuke Japan, England, France, Germany, most of eastern Europe and the entire east and west coasts of America into oblivion. At that point, I don’t think the remaining radiation-resistant insects would be particularly interested in whether the DNS system is functioning or not.

The biggest threat HANE poses to our infrastructure is to our power grid. The world is coated with a mesh of power wiring, stretching over hundreds of miles of land. Since the cables are so long, and are very likely to cut through the magnetic lines of flux in a perpendicular manner, the induced currents could be massive. Local substations would burn out, street lighting would fry, circuit breakers would pop, and fuses would blow. Without power, our worries about communications infrastructure being disrupted would be rather pointless. The servers that host anything worth accessing would be down anyway, and it’d be likely that you couldn’t boot your PC or charge your laptop, due to lack of power. Even when sections of the power grid come back online, the demand would be massive, causing further outages and brown-outs.

So, how can we protect ourselves? Here are a few ideas:

- Wrap everything important in a giant Faraday cage. Not exactly practical for power plants or datacenters, though.

- Ensure that significant chunks of the world’s communications infrastructure are built around trans-oceanic cables and optical links.

- Ensure that nation-critical services are distributed globally, with the ability to self-heal if remote systems become unreachable.

- Be prepared to switch to lo-tech communications in the event of such a disaster.

Perhaps the thing to take away from this analysis is that nukes are dangerous, and there’s not much you can do to prevent their aftermath. The best policy is to not detonate nukes at high altitude, or if possible, not to detonate nukes at all.

Nukes are bad, m’kay.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

Confidentiality, Integrity, Availability: The three components of the CIA Triad

In this post, I shall be exploring one of the fundamental concepts of security that should be familiar to most security professionals and students: the CIA triad.

What is the CIA triad? No, CIA in this case is not referring to the Central Intelligence Agency. CIA refers to Confidentiality, Integrity and Availability. Confidentiality of information, integrity of information and availability of information. Many security measures are designed to protect one or more facets of the CIA triad. I shall be exploring some of them in this post.

Confidentiality

When we talk about confidentiality of information, we are talking about protecting the information from disclosure to unauthorized parties.

Information has value, especially in today’s world. Bank account statements, personal information, credit card numbers, trade secrets, government documents. Everyone has information they wish to keep secret. Protecting such information is a very major part of information security.

A very key component of protecting information confidentiality is encryption. Encryption ensures that only the right people (people who know the key) can read the information. Encryption is VERY widespread in today’s environment and can be found in almost every major protocol in use. A very prominent example is SSL/TLS, a security protocol for communications over the internet that has been used in conjunction with a large number of internet protocols to ensure security.

Other ways to ensure information confidentiality include enforcing file permissions and access control lists to restrict access to sensitive information.
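As a small illustration of enforcing file permissions, this Python sketch restricts a file so that only its owner can read or write it (mode 0o600). It assumes a POSIX system; on Windows, `os.chmod` only toggles the read-only flag, so the result differs. The file name and contents are hypothetical.

```python
import os
import stat
import tempfile


def restrict_to_owner(path: str) -> int:
    """Allow only the file's owner to read or write it (mode 0o600); return the new mode."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)


# Usage: a hypothetical sensitive file in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    secret = os.path.join(tmp, "statements.txt")
    with open(secret, "w") as f:
        f.write("account 12345678: balance 100.00\n")
    print(oct(restrict_to_owner(secret)))  # 0o600 on POSIX systems
```

File modes are coarse (owner, group, everyone else); access control lists allow finer-grained rules per user or group.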

Keeping valuable algorithms secret

This is an excellent question on Security.Stackexchange that covers how to keep important information confidential. Similar questions can be found here.

Integrity

Integrity of information refers to protecting information from being modified by unauthorized parties.

Information only has value if it is correct. Information that has been tampered with could prove costly. For example, if you were sending an online money transfer for $100, but the information was tampered with in such a way that you actually sent $10,000, it could prove to be very costly for you.

As with data confidentiality, cryptography plays a very major role in ensuring data integrity. Commonly used methods to protect data integrity include hashing the data you receive and comparing it with the hash of the original message. However, this means that the hash of the original data must be provided to you in a secure fashion. A more convenient method is to use an existing scheme such as GPG to digitally sign the data.
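The hash-and-compare method can be sketched in a few lines of Python using the standard `hashlib` and `hmac` modules. This assumes SHA-256 and assumes the published hash reached you over a trusted channel; the function names and messages are illustrative. A plain hash only detects tampering if the attacker cannot also replace the published hash, which is why signing schemes such as GPG are more convenient.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the data."""
    return hashlib.sha256(data).hexdigest()


def verify_download(data: bytes, published_hex: str) -> bool:
    """Compare the hash of received data with a hash obtained over a trusted channel."""
    # compare_digest avoids leaking how many leading characters matched via timing.
    return hmac.compare_digest(sha256_hex(data), published_hex.lower())


published = sha256_hex(b"transfer $100 to account 42")
print(verify_download(b"transfer $100 to account 42", published))     # True
print(verify_download(b"transfer $10000 to account 42", published))   # False
```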

Why aren’t application downloads routinely done over HTTPS?

This is a question regarding data integrity, with several suggestions on how to protect data integrity. You can find more questions with the integrity tag here.

Availability

Availability of information refers to ensuring that authorized parties are able to access the information when needed.

Information only has value if the right people can access it at the right times. Denying access to information has become a very common attack nowadays. Almost every week you can find news about high profile websites being taken down by DDoS attacks. The primary aim of DDoS attacks is to deny users of the website access to the resources of the website. Such downtime can be very costly. Other factors that could lead to lack of availability to important information may include accidents such as power outages or natural disasters such as floods.

How does one ensure data availability? Backup is key. Regularly doing off-site backups can limit the damage caused by hard drive failures or natural disasters. For information services that are highly critical, redundancy might be appropriate. Having an off-site location ready to restore services if anything happens to your primary data centers will heavily reduce downtime.

Conclusion

The CIA triad is a very fundamental concept in security. Often, ensuring that the three facets of the CIA triad are protected is an important step in designing any secure system. However, it has been suggested that the CIA triad is not enough. Alternative models such as the Parkerian hexad (Confidentiality, Possession or Control, Integrity, Authenticity, Availability and Utility) have been proposed. Other factors besides the three facets of the CIA triad, such as non-repudiation, are also very important in certain scenarios. There have been debates over the pros and cons of such alternative models, but that is a post for another time.

Thank you for reading.

Exploiting ATMs: a quick overview of recent hacks

A few weeks ago, Kyle Rozendo asked a question on the IT Security StackExchange about Cracking a PCI terminal using a trojan based on the card. It caught my attention, so I started digging a little deeper into this matter.

There are some difficulties involved in hacking an ATM:

- Often proprietary software

- Often custom OS or modified embedded Windows

This means a high level of understanding is necessary, as well as access to ATMs to test on. All of the attacks I’ve dug up had some level of inside information before they were constructed.

2009: Diebold gets targeted by Skimer-A Trojan

One of the first serious hacks I came across was a Trojan found in ATMs in eastern Europe around 2009. As reported by Sophos, the attack was aimed at Diebold Opteva ATMs.

The Trojan was named Skimer-A. Its main goals were:

- Steal information (card numbers and PINs)

- Allow remote access

- Drop more malware

The hack required physical access to the machine. The perpetrators used social engineering to persuade stores to allow them physical access to the machine after hours, so they could install the Trojan. After an analysis of the malware, Diebold concluded the attackers also had to have inside information about the systems. A lot of the functions used to extract information were part of the ATM’s operating software, but were never documented. The attackers also knew administrative passwords, unlocked the custom Windows CE version Diebold used, and misconfigured its firewall. (This was concluded from the security update by Diebold.)

2010: ATM Jackpotting by Barnaby Jack

In 2010, McAfee security expert Barnaby Jack presented his “ATM Jackpotting” research at Blackhat. After careful analysis with physical access to a few teller machines, he was able to write a tool that could remotely exploit an ATM and patch it so you can call up a custom menu with an access code, or remotely start emptying the ATM’s money cassettes (hence Jackpotting).

The attack is aimed at standalone and hole-in-the-wall ATMs. The ATMs often run:

- ARM/XSCALE processor

- Windows CE

- TCP/IP, Dial Up or CDMA wireless

- Support for SSL

- 3DES encrypted pin pad

In his research he used 3 different ATMs (he ordered these and had them delivered to his home). He started his research by looking at the internal workings and, although there were some security measures in place, once he had physical access many possibilities started to appear. He started by looking for a way to modify the boot sequence, because the ATM boots straight into its proprietary software. This meant he had to patch the system so he could get access to a shell. He accomplished this by using a JTAG debugger.

Using the JTAG module, he was able to send a break while the different services were starting. After this he could launch a proper shell.

This work was all necessary to reverse engineer the software and develop the actual attacks:

- Walk up attack by “upgrading” the firmware with a flashcard (this required physical access, and a key to open the machine and access the motherboard – such keys are standard, and easy to find on the Internet).

- Remote configuration attack: the firmware can be upgraded remotely

The latter is the most interesting attack, but there are some security defenses in place that make a bruteforce attack impossible. However Barnaby Jack was able to find a vulnerability in the authentication mechanism which allowed him to log in to the machine. He wrote a tool to do these attacks, named “Dillinger”. Now the problem he faced was how to find the ATMs on the internet.

Whilst ATMs support TCP/IP, about 95% of all ATMs still connect using dial-up. This means war dialing, using a VoIP tool like WarVox, makes it possible to go and find ATMs. Most ATMs use a proprietary protocol, so once you identify this protocol you know an ATM is listening on the other side, and you can go and try to exploit it.

Once you have access to the ATM you can spawn a shell and install a rootkit. You will still need to identify where the ATM is physically located so you can go and collect the money. This is done by reading the configuration file (often the address is present on the receipts).

The rootkit used to keep access to the teller is called “Scrooge”. It hides itself on the machine. One difficulty is that the kit needs to be modified for almost every version of ATM software that’s running, because of different peripherals and non-standard ways to communicate. After installing the kit you can walk up to the ATM and enter a key sequence on the keypad; this brings up a custom menu that allows you to jackpot the ATM (completely empty it) or dispense a specific amount of cash. This can also be done remotely.

Barnaby suggests the following countermeasures:

- Better physical locks

- Executable signing at the kernel level

- Implement a Trusted Environment

- Put them on a separate, firewalled network

- Disable the Remote Management System if you aren’t using it

- More and better code auditing

You can find the complete presentation on Vimeo.

2012: MWR InfoSecurity reveals chip and PIN vulnerability

Chip and PIN is a system where one can insert his banking or credit card into a small machine and make an electronic payment. In the U.K. there is a government backed initiative to make these as widespread as possible. MWR InfoSecurity, a Basingstoke (U.K.) based security company, revealed a way to attack these terminals with a custom PIN card.

The attacks demonstrated at Blackhat 2012:

- Producing a fake receipt, making a cashier think the payment was successful

- Infect PIN entry devices to collect card data and harvest these with another rogue card

- Network and interface attack

Apparently the types of exploits involved were present in ordinary computers more than a decade ago, which makes you wonder why this problem was ignored or went undetected, especially since Cambridge University researchers warned banks about the lack of security in these types of machines as early as 2010. Issues included unencrypted and unauthenticated communication between the terminal and the remote administration server, which makes a man-in-the-middle attack dead easy. At the time of writing, no white paper has appeared (at least none that I’m aware of or have had access to). The devices affected were produced by VeriFone.

Conclusion

If we look at the attacks over time, it becomes clear that they can be deployed faster and faster. The hacks still require a high level of knowledge and understanding of these systems, but because there are some really basic security issues like bad code reviewing, unencrypted/unauthenticated communication and bad physical security, the attacks are seemingly easy to deploy. It’s up to the producers of these machines to start securing them. Companies still rely too much on security through obscurity and do not expect an attack because a hacker would need insider information. Previous articles suggest that it’s not extremely hard to get that information.

Sources:

- Geoff White, Channel 4, Credit card readers can be hacked for details, 29 July 2012

- Anonymous, Infosecurity, Russians hack Diebold ATM software, 19 March 2009

- Anonymous, Sophos, Troj/Skimer-A, 17 March 2009

- Pat Carroll, Finextra, Protecting Pin Pad Payment, 18 July 2012

- Vanja Svajcer, Naked Security, Credit card skimming malware targeting ATMs, 17 March 2009

- Graham Cluley, Naked Security, Is there malware lurking in your ATM?, 17 March 2009

- Graham Cluley, Naked Security, More details on the Diebold ATM Trojan horse case, 18 March 2009

- Warwick Ashford, Computer Weekly, BlackHat 2012: UK firm MWR InfoSecurity reveals chip and PIN vulnerability, 26 July 2012

QOTW #32 – How can mini-computers (like Raspberry Pi) be applied to IT security?

This week’s question is nominated by me: How can mini-computers (like Raspberry Pi) be applied to IT security?

There are 2 parts to the question.

1) What practical applications such mini-computers have in IT security, or what role they could play in cyber-defense, network analysis, etc.

2) What risks might they introduce to a local network environment that should be considered?

The top-rated answer, by Tobias, suggests that mini-computers like the Raspberry Pi could be used as cheap penetration testing tools, linking to the Pwnpi distribution, a specialized Linux distro for the Raspberry Pi. The answer also links to the Pwnplug, a small, compact penetration testing drop box that has a full suite of penetration testing tools.

The answer by Rofls reinforces the answer by Tobias, stating that mini-computers like the Raspberry Pi could be used as cheap, disposable hardware for hacking when coupled with a USB wireless adapter. The hacker would only lose a small amount of money if the Raspberry Pi was discovered.

The answer by Diarmaid links to IPFire, which can turn the Raspberry Pi into a cheap firewall or IDS/IPS.

adamo also suggests that the Pi can be used to build cheap sensor networks to monitor Wifi, Ethernet or Bluetooth. He also suggests that the Pi can be used as an emergency DHCP or DNS server in situations where a quick fix is needed.

The Raspberry Pi is an example of a growing class of cheap hardware that can be used for hacking and penetration testing purposes. HakShop has a great set of cheap hardware that can perform tasks that used to require dedicated equipment costing thousands of dollars. This makes the threat of hacking even more prevalent, as more users are able to afford the hardware needed to perform specialised tasks.

This is clearly going to present a new and interesting challenge for security professionals to defend against. I look forward to seeing what solutions the many bright minds in this industry can come up with.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.