Community member Iszi nominated this week’s question, which asks for an explanation of the issue from the perspective of a developer/engineer: What exactly is being exploited, and why does it work?

Polynomial provided the following detailed, technical answer:

CVE-2012-4969, aka the latest IE 0-day, is based on a use-after-free bug in IE’s rendering engine. A use-after-free occurs when a dynamically allocated block of memory is used after it has been disposed of (i.e. freed). Such a bug can be exploited by creating a situation where an internal structure contains pointers to sensitive memory locations (e.g. the stack or executable heap blocks) in a way that causes the program to copy shellcode into an executable area.

In this case, the problem is with the CMshtmlEd::Exec function in mshtml.dll. The CMshtmlEd object is freed unexpectedly, then the Exec method is called on it after the free operation.

First, I’d like to cover some theory. If you already know how use-after-free works, then feel free to skip ahead.

At a low level, a class can be equated to a memory region that contains its state (e.g. fields, properties, internal variables, etc.) and a set of functions that operate on it. The functions actually take a “hidden” parameter, which points to the memory region that contains the instance state.

For example (excuse my terrible pseudo-C++):

```cpp
class Account
{
    int balance = 0;
    int transactionCount = 0;

public:
    void AddBalance(int amount)
    {
        balance += amount;
        transactionCount++;
    }

    void SubtractBalance(int amount)
    {
        balance -= amount;
        transactionCount++;
    }

    int GetBalance() const { return balance; }
    int GetTransactionCount() const { return transactionCount; }
};
```

The above can actually be represented as the following:

```c
#include <stdlib.h>

/* "Private": in a real library this definition would live only in the .c file,
   so callers only ever see an opaque void* and cannot touch the fields. */
typedef struct Account
{
    int balance;
    int transactionCount;
} Account;

void* Account_Create(void)
{
    Account* account = (Account*)malloc(sizeof(Account));
    if (account == NULL)
        return NULL;
    account->balance = 0;
    account->transactionCount = 0;
    return (void*)account;
}

void Account_Destroy(void* instance)
{
    free(instance);
}

void Account_AddBalance(void* instance, int amount)
{
    ((Account*)instance)->balance += amount;
    ((Account*)instance)->transactionCount++;
}

void Account_SubtractBalance(void* instance, int amount)
{
    ((Account*)instance)->balance -= amount;
    ((Account*)instance)->transactionCount++;
}

int Account_GetBalance(void* instance)
{
    return ((Account*)instance)->balance;
}

int Account_GetTransactionCount(void* instance)
{
    return ((Account*)instance)->transactionCount;
}
```

I’m using void* to demonstrate the opaque nature of the reference, but that’s not really important. The point is that we don’t want anyone to be able to alter the Account struct manually, otherwise they could add money arbitrarily, or modify the balance without increasing the transaction counter.

Now, imagine we do something like this:

```c
void* myAccount = Account_Create();
Account_AddBalance(myAccount, 100);
Account_SubtractBalance(myAccount, 75);
// ...
Account_Destroy(myAccount);
// ...
if (Account_GetBalance(myAccount) > 1000) // <-- !!! use after free !!!
    ApproveLoan();
```

Now, by the time we reach `Account_GetBalance`, the pointer value in `myAccount` actually points to memory that is in an indeterminate state. Now, imagine we can do the following:

- Trigger the call to `Account_Destroy` reliably.
- Execute any operation after `Account_Destroy` but before `Account_GetBalance` that allows us to allocate a reasonable amount of memory, with contents of our choosing.

Usually, these calls are triggered in different places, so it’s not too difficult to achieve this. Now, here’s what happens:

1. `Account_Create` allocates an 8-byte block of memory (4 bytes for each field) and returns a pointer to it. This pointer is now stored in the `myAccount` variable.
2. `Account_Destroy` frees the memory. The `myAccount` variable still points to the same memory address.
3. We trigger our memory allocation, containing repeating blocks of `39 05 00 00 01 00 00 00`. Read as two little-endian 32-bit integers, this pattern corresponds to `balance = 1337` (0x539) and `transactionCount = 1`. Since the old memory block is now marked as free, it is very likely that the memory manager will reuse it for our new allocation, overwriting its old contents.
4. `Account_GetBalance` is called, expecting `myAccount` to point to an `Account` struct. In actuality, it points to our overwritten memory block, so the balance reads as 1337 and the loan is approved!
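The four steps above can be replayed deterministically with a small sketch. A genuine use-after-free is undefined behaviour in C, so this version assumes a hypothetical one-slot toy allocator (`ToyAlloc`/`ToyFree` are made-up names, not a real heap API) that always hands a freed slot straight back to the next allocation. It writes a single copy of the 8-byte pattern and assumes a little-endian CPU such as x86.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Same layout as the Account struct: two 4-byte fields, 8 bytes in total. */
typedef struct Account
{
    int32_t balance;
    int32_t transactionCount;
} Account;

/*
 * Hypothetical toy allocator with exactly one 8-byte slot. Once the slot is
 * freed, the next allocation that fits gets the same address back: the reuse
 * an attacker hopes a real heap manager will perform.
 */
static Account toySlot;
static int toySlotInUse = 0;

static void* ToyAlloc(size_t size)
{
    if (size > sizeof toySlot || toySlotInUse)
        return NULL;
    toySlotInUse = 1;
    return &toySlot;
}

static void ToyFree(void* p)
{
    (void)p;            /* marks the slot free; its bytes are left in place */
    toySlotInUse = 0;
}

/* Replays the four steps and reports what Account_GetBalance would see. */
void ReplayUseAfterFree(int32_t* balance, int32_t* transactionCount)
{
    static const unsigned char payload[8] =
        { 0x39, 0x05, 0x00, 0x00, 0x01, 0x00, 0x00, 0x00 };

    /* 1. Account_Create plus two transactions: balance 25, count 2. */
    Account* myAccount = ToyAlloc(sizeof(Account));
    myAccount->balance = 0;
    myAccount->transactionCount = 0;
    myAccount->balance += 100;
    myAccount->transactionCount++;
    myAccount->balance -= 75;
    myAccount->transactionCount++;

    /* 2. Account_Destroy: myAccount keeps pointing at the old address. */
    ToyFree(myAccount);

    /* 3. Attacker-controlled allocation of the same size, with chosen bytes. */
    void* attackerBlock = ToyAlloc(sizeof payload);
    memcpy(attackerBlock, payload, sizeof payload);

    /* 4. Read through the stale pointer (1337 and 1 on a little-endian CPU). */
    *balance = myAccount->balance;
    *transactionCount = myAccount->transactionCount;

    ToyFree(attackerBlock);   /* reset the toy heap so the replay can run again */
}
```

Calling `ReplayUseAfterFree` yields a balance of 1337 and a transaction count of 1, even though the legitimate account only ever held 25. A real heap is far less predictable, which is why exploits allocate many copies of the pattern.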

This is all a simplification, of course, and real classes produce rather more complex code. The point is that a class instance is really just a pointer to a block of data, and class methods are just the same as any other function, but they “silently” accept a pointer to the instance as a parameter.
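Virtual methods, which matter for the crash site discussed later, add one more level of indirection: the first field of the object is a pointer to a table of function pointers (the vtable). The sketch below writes that layout out by hand in C. All names are hypothetical, and the assembly in the comment is only roughly what a 32-bit compiler emits for a COM-style call where the object pointer is pushed on the stack.

```c
/* Hypothetical object laid out the way a C++/COM object with virtual methods is. */
typedef struct Object Object;

typedef struct ObjectVtbl
{
    int (*Slot0)(Object* self);
    int (*Slot1)(Object* self);
    int (*Slot2)(Object* self);   /* third entry: offset 8 when pointers are 4 bytes */
} ObjectVtbl;

struct Object
{
    const ObjectVtbl* vtbl;       /* first field: pointer to the table of methods */
    int state;
};

static int Unused(Object* self)         { (void)self; return 0; }
static int RealMethod(Object* self)     { return self->state; }
static int AttackerMethod(Object* self) { (void)self; return 1337; }

static const ObjectVtbl realVtbl = { Unused, Unused, RealMethod };
static const ObjectVtbl fakeVtbl = { Unused, Unused, AttackerMethod };

/*
 * A virtual call through the third slot. With the object pointer in edi on
 * 32-bit x86, this is roughly:
 *   mov  eax, dword ptr [edi]    ; load the vtable pointer from the object
 *   push edi                     ; pass the object as the hidden parameter
 *   call dword ptr [eax+8]       ; call the third function pointer
 */
int CallSlot2(Object* obj)
{
    const ObjectVtbl* table = obj->vtbl;
    return table->Slot2(obj);
}

/* Normal case: the object still holds its genuine vtable pointer. */
int CallOnIntactObject(void)
{
    Object o = { &realVtbl, 42 };
    return CallSlot2(&o);
}

/* Reclaimed case: the first field now holds an attacker-chosen table pointer. */
int CallOnReclaimedObject(void)
{
    Object o = { &realVtbl, 42 };
    o.vtbl = &fakeVtbl;   /* stands in for freed memory refilled by the attacker */
    return CallSlot2(&o);
}
```

The calling code is identical in both cases; only the first dword of the object differs. That is why controlling the contents of a freed object is enough to redirect a virtual call.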

This principle can be extended to control values on the stack, which in turn lets us modify program execution. Usually, the goal is to drop shellcode on the stack, then overwrite a return address so that it points to a `jmp esp` instruction, which then runs the shellcode.

This trick works on machines without DEP, but when DEP is enabled the stack is not executable, so shellcode placed there cannot run. Instead, the payload must be built using Return-Oriented Programming (ROP), which chains together small blocks of legitimate code from the application and its modules to perform an API call that gives the shellcode an executable memory block, thereby bypassing DEP.
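The chaining idea behind ROP can be modelled with a toy interpreter. This is a simulation, not real machine code: the "gadgets" are C functions standing in for short instruction sequences that end in `ret`, and their "addresses" are indices into a table (all names here are hypothetical). Each loop iteration models a `ret` popping the next address off an attacker-supplied stack.

```c
#include <stdint.h>

/* Toy CPU state: two registers and a stack pointer into attacker-supplied words. */
typedef struct Machine
{
    uint32_t eax;
    uint32_t ebx;
    const uint32_t* esp;
} Machine;

typedef void (*Gadget)(Machine* m);

/* Each toy "gadget" stands for a short existing instruction sequence ending in ret. */
static void PopEax(Machine* m)    { m->eax = *m->esp++; }   /* pop eax ; ret */
static void PopEbx(Machine* m)    { m->ebx = *m->esp++; }   /* pop ebx ; ret */
static void AddEaxEbx(Machine* m) { m->eax += m->ebx; }     /* add eax, ebx ; ret */

/* Hypothetical gadget "addresses": indices into the table of existing code. */
enum { G_POP_EAX = 0, G_POP_EBX = 1, G_ADD_EAX_EBX = 2 };
#define CHAIN_END 0xFFFFFFFFu

static const Gadget gadgets[] = { PopEax, PopEbx, AddEaxEbx };

/*
 * Runs a chain laid out on the fake stack. Every iteration models a ret:
 * pop the next "return address" and run that gadget. No new code is ever
 * written; only existing gadgets run, in an order chosen by the data.
 */
uint32_t RunChain(const uint32_t* stack)
{
    Machine m = { 0, 0, stack };
    for (;;)
    {
        uint32_t target = *m.esp++;
        if (target == CHAIN_END || target >= sizeof gadgets / sizeof gadgets[0])
            break;
        gadgets[target](&m);
    }
    return m.eax;
}
```

For example, the stack `{ G_POP_EAX, 1000, G_POP_EBX, 337, G_ADD_EAX_EBX, CHAIN_END }` leaves 1337 in `eax`. In a real exploit the entries are actual code addresses in loaded modules, and the chain typically sets up arguments for an API such as `VirtualProtect` to make the shellcode's memory executable.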

Anyway, I’m going off-topic a bit, so let’s get into the juicy details of CVE-2012-4969!

In the wild, the payload was dropped via a packed Flash file, designed to exploit the Java vulnerability and the new IE bug in one go. There’s also been some interesting analysis of it by AlienVault.

The Metasploit module says the following:

> This module exploits a vulnerability found in Microsoft Internet Explorer (MSIE). When rendering an HTML page, the CMshtmlEd object gets deleted in an unexpected manner, but the same memory is reused again later in the CMshtmlEd::Exec() function, leading to a use-after-free condition.

There’s also an interesting blog post about the bug, albeit in rather poor English – I believe the author is Chinese. Anyway, the blog post goes into some detail:

> When the execCommand function of IE execute a command event, will allocated the corresponding CMshtmlEd object by AddCommandTarget function, and then call mshtml@CMshtmlEd::Exec() function execution. But, after the execCommand function to add the corresponding event, will immediately trigger and call the corresponding event function. Through the document.write("L") function to rewrite html in the corresponding event function be called. Thereby lead IE call CHTMLEditor::DeleteCommandTarget to release the original applied object of CMshtmlEd, and then cause triggered the used-after-free vulnerability when behind execute the msheml!CMshtmlEd::Exec() function.

Let’s see if we can parse that into something a little more readable:

- An event is applied to an element in the document.
- The event executes, via `execCommand`, which allocates a `CMshtmlEd` object via the `AddCommandTarget` function.
- The target event uses `document.write` to modify the page.
- The event is no longer needed, so the `CMshtmlEd` object is freed via `CHTMLEditor::DeleteCommandTarget`.
- `execCommand` later calls `CMshtmlEd::Exec()` on that object, after it has been freed.
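The key point in that sequence is that the event handler runs synchronously, between the allocation and the `Exec` call. The following is a heavily simplified toy model of that ordering. Every name in it is hypothetical (none of it is real mshtml code), and a `freed` flag replaces an actual `free()` so the stale use can be observed without undefined behaviour.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the CMshtmlEd command target. */
typedef struct CommandTarget
{
    bool freed;   /* toy bookkeeping instead of a real free() */
} CommandTarget;

typedef void (*EventHandler)(CommandTarget* target);

/* Models CHTMLEditor::DeleteCommandTarget releasing the object. */
static void ToyDeleteCommandTarget(CommandTarget* target)
{
    target->freed = true;
}

/* Handler that rewrites the page (document.write), which tears down the target. */
static void HandlerThatRewritesPage(CommandTarget* target)
{
    ToyDeleteCommandTarget(target);
}

/* Handler that leaves the page alone. */
static void HarmlessHandler(CommandTarget* target)
{
    (void)target;
}

/* Models CMshtmlEd::Exec(): reports whether it ran on an already-released object. */
static bool ToyExec(const CommandTarget* target)
{
    return target->freed;
}

/* Models execCommand: allocate the target, fire the event synchronously, then Exec. */
bool ToyExecCommandHitsFreedTarget(EventHandler handler)
{
    CommandTarget target = { false };   /* AddCommandTarget */
    handler(&target);                   /* the event runs before Exec is reached */
    return ToyExec(&target);            /* same pointer, used after the handler returns */
}
```

With `HarmlessHandler` the final call sees a live object; with `HandlerThatRewritesPage` it operates on one that has already been released, which in the real browser is the use-after-free.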

Part of the code at the crash site looks like this:

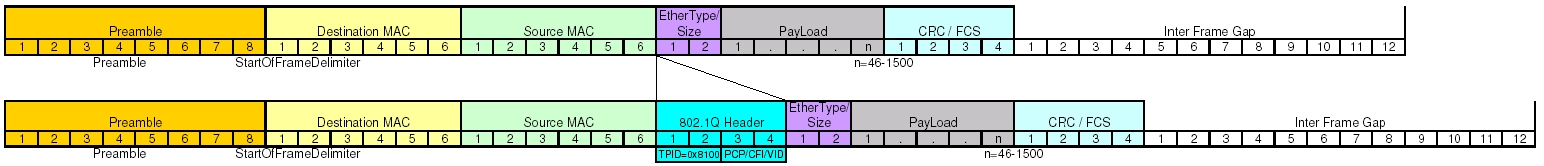

```
637d464e 8b07            mov     eax,dword ptr [edi]
637d4650 57              push    edi
637d4651 ff5008          call    dword ptr [eax+8]
```

The use-after-free allows the attacker to control the value of `edi`, which can be modified to point at memory that the attacker controls. Let’s say that we can place arbitrary data in memory at 01234f00, via a memory allocation. We populate the data as follows:

```
01234f00: 01234f00   // fake vtable pointer: points back at itself
01234f04: 41414141   // filler
01234f08: 01234f0c   // "method" slot at vtable+8
01234f0c: cccccccc   // int3 breakpoints
```

1. We set `edi` to 01234f00, via the use-after-free bug.

2. `mov eax,dword ptr [edi]` loads the dword stored at the address in `edi` (01234f00) into `eax`. That dword is 01234f00, so `eax` now holds 01234f00.

3. `push edi` pushes 01234f00 to the stack.

4. `call dword ptr [eax+8]` takes `eax` (which is 01234f00) and adds 8 to it, giving us 01234f08. It then dereferences that memory address, giving us 01234f0c. Finally, it calls 01234f0c.

5. The data at 01234f0c is treated as an instruction. `cc` is the opcode for `int3`, which causes the debugger to raise a breakpoint. We’ve executed code!
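The pointer chasing in those steps can be checked with a tiny emulator. This sketch assumes a made-up memory map in which the fake vtable pointer at 01234f00 points back at itself, the slot at +8 holds 01234f0c, and 01234f0c holds `cc` (`int3`) bytes; `ReadDword`, `CallTarget` and `OpcodeAt` are hypothetical helpers that emulate only the three instructions from the crash site.

```c
#include <stdint.h>

/* A tiny fake address space: four dwords starting at a made-up base address. */
#define BASE 0x01234f00u

static const uint32_t fakeMemory[] =
{
    0x01234f00u,   /* 01234f00: fake vtable pointer, points back at itself */
    0x41414141u,   /* 01234f04: filler */
    0x01234f0cu,   /* 01234f08: slot at vtable+8, "function pointer" to our bytes */
    0xccccccccu,   /* 01234f0c: int3 int3 int3 int3 */
};

/* Reads a dword from the fake address space (addr must be aligned and in range). */
static uint32_t ReadDword(uint32_t addr)
{
    return fakeMemory[(addr - BASE) / 4];
}

/* Emulates mov eax,[edi] / push edi / call [eax+8]; returns the new eip. */
uint32_t CallTarget(uint32_t edi, uint32_t* pushed)
{
    uint32_t eax = ReadDword(edi);   /* mov eax, dword ptr [edi] */
    *pushed = edi;                   /* push edi */
    return ReadDword(eax + 8);       /* call dword ptr [eax+8] */
}

/* Returns the byte at addr, taking bytes within each dword in x86 little-endian order. */
uint8_t OpcodeAt(uint32_t addr)
{
    uint32_t offset = addr - BASE;
    return (uint8_t)(fakeMemory[offset / 4] >> (8 * (offset % 4)));
}
```

Starting with `edi` = 01234f00, `CallTarget` returns 01234f0c, and `OpcodeAt` on that address gives `0xcc`, the `int3` opcode.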

This allows us to control eip, so we can modify program flow to our own shellcode, or to a ROP chain.

Please keep in mind that the above is just an example, and in reality there are many other challenges in exploiting this bug. It’s a pretty standard use-after-free, but the nature of JavaScript makes for some interesting timing and heap-spraying tricks, and DEP forces us to use ROP to gain an executable memory block.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.
