Author Archive

Stump the Chump with Auditd 01

ServerFault user ewwhite describes a rather interesting situation regarding application distribution wherein code must be compiled in production. In short he wants to keep track of changes to a specific directory path and send alerts via email.

Let’s assume that there already exists some basic form of auditd in play, so as such we’ll be building out a snippet to be inserted into your existing /etc/audit/audit.rules. Ed was sparse on some of the specifics related to the application, understandably so, so let’s make some additional assumptions. Let’s assume that the source code directory in question is “/opt/application/src” and that all binaries are installed into “/opt/application/bin“.

-w /opt/application/src -p w -k app-policy -w /opt/application/bin -p wa -k app-policyI’ve decided to process each directory, source and binaries, separately. The commonality between the two are the ‘-w’ and the ‘-k’ options. The ‘-w’ option says to watch that directory recursively. The ‘-k’ option says to add the text “app-policy” to the output log message, this is just to make log reviews easier. The ‘-p’ option is actually where the magic happens, and is the real reason to separate these two rules out.

As we discussed in the previous post, nearly 8 months ago, the option ‘-p w’ instructs the kernel to log on file writes. One would assume this is accomplished by attaching to the POSIX system call write. That syscall, however, gets called quite a lot when files are actually saved. So as to not overwhelm the logging system auditd instead attaches to the open system call. By using the (w)rite argument we look for any instance of open that uses the O_WRONLY or O_RDWR flags. It’s worth noting that this does not mean a file was actually modified, only that it was opened in such a way that would allow for modification. For example, if a user opened “/opt/application/src/app.h” in a text editor a log would be generated, however if it was written to the terminal using cat or read using less then no log would be generated. This is pretty important to remember as many people will read a file using a text editor and simply exit without saving changes (hopefully).

We also want to watch for file writes in the binary directory except here we would expect them to be more reliable. It would be rather unusual, but not out of the question, for someone to attempt to use a text editor to open an executable. In addition we added the (a)ttribute option. This will alert us if any of the ownership or access permissions change, most importantly if a file is changed to be executable or the ownership is changed. This will not catch SELinux context changes but since SELinux uses the auditd logging engine then those changes will show up in the same log file.

Now that we have the rules constructed we can move on to the alerting. Ed wanted the events to be emailed out. This is actually quite a bit more complicated. By default auditd uses it’s own built in logger. This does make some sense when you consider the type of logging this system is intended to perform. By not relying on an external logger, like syslogd or rsyslog, it can better suffer mistaken configurations. On the downside it makes alternate logging setups trickier. There does exist a subsystem called ‘audispd’ that acts as a log multiplexor. There are a number of output plugins available, such as syslog, UNIX socket, prelude IDS, etc. None of them really do what Ed wants, so I think our best bet would run reports. Auditd is, after all, an auditing tool and not an enforcement tool. So let’s look at something a little different.

Remember how we tacked on ‘-k app-policy‘ to those rules above? Now we get to the why. Let’s try running the command:

aureport -k -ts yesterday 00:00:00 -te yesterday 23:59:59We should now see a list of all of the logs that contain keys and occurred yesterday. Let’s look at a concrete example of me editing a file in that directory and the subsequent logs.

root@ node1:~> mkdir -p /opt/application/src root@ node1:~> vim /opt/application/src/app.h root@ node1:~> aureport -kThe report tells us that at 11:41:29 on September the 24th a user ran the command “/usr/bin/vim” and triggered a rule labeled app-policy. It’s all good so far, but not very detailed. The last two fields, however, are quite useful. The first, 1000, is the UID of my personal account. That is important because notice I was actually running as root. Since I had originally used “sudo -i to gain a root shell my original UID was still preserved, this is good! The last field is a unique event ID generated by auditd. Let’s look at that first event, numbered 13446.

Key Report \=============================================== # date time key success exe auid event \=============================================== 1. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13446 2. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13445 3. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13447 4. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13448 5. 09/24/2013 11:41:29 app-policy yes /usr/bin/vim 1000 13449 6. 09/24/2013 11:41:35 app-policy yes /usr/bin/vim 1000 13451 7. 09/24/2013 11:41:35 app-policy yes /usr/bin/vim 1000 13450

root@ node1:~> grep :13446 /var/log/audit/audit.log type=SYSCALL msg=audit(1380037289.364:13446): arch=c000003e syscall=2 success=yes exit=4 a0=bffa20 a1=c2 a2=180 a3=0 items=2 ppid=21950 pid=22277 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 ses=1226 tty=pts0 comm="vim" exe="/usr/bin/vim" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key="app-policy" type=CWD msg=audit(1380037289.364:13446): cwd="/root" type=PATH msg=audit(1380037289.364:13446): item=0 name="/opt/application/src/" inode=2747242 dev=fd:01 mode=040755 ouid=0 ogid=0 rdev=00:00 obj=unconfined_u:object_r:usr_t:s0 type=PATH msg=audit(1380037289.364:13446): item=1 name="/opt/application/src/.app.h.swx" inode=2747244 dev=fd:01 mode=0100600 ouid=0 ogid=0 rdev=00:00 obj=unconfined_u:object_r:usr_t:s0This is what we mean when we say audit logs are verbose. In the introductory blog post we discussed some of those fields so I’ll save us the pain of going over it again. What we can see, however, is that the user with uid 1000 (see auid=1000) ran the command vim as root (see euid=0) and that command resulted in a change to both “/opt/application/src/” and “/opt/application/src/.app.h.swx“.

What we should be able to see here is that the report generated by aureport doesn’t contain everything we need to see what happened, but it does tell us something happened and gives us the information necessary to find the information. In an ideal world you would have some kind of log aggregation system, like Splunk or a SIEM, and send the raw logs there. That system would then have all the alerting functionality built in to alert an admin to to the potential policy violation. However, we don’t live in a perfect world and Ed’s request for email alerts indicate he doesn’t have access to such a system. What I would do is set up a daily cron job to run that report for the previous day. Every morning the log reviewer can check their mailbox and see if any of those files changed when they weren’t supposed to. If daily isn’t reactive enough then we can simply change the values passed to ‘-ts’ and ‘-te’ and run the job more frequently.

Pulling it all together we should have something that looks like this.

#/etc/audit/audit.rules # This file contains the auditctl rules that are loaded # whenever the audit daemon is started via the initscripts. # The rules are simply the parameters that would be passed # to auditctl.

# First rule - delete all -D

# Increase the buffers to survive stress events. # Make this bigger for busy systems -b 320

# Feel free to add below this line. See auditctl man page -w /opt/application/src -p w -k app-policy -w /opt/application/bin -p wa -k app-policy #/etc/cron.d/audit-report MAILTO=ewwhite@example.com

1 0 * * * root /sbin/aureport -k -ts yesterday 00:00:00 -te yesterday 23:59:59

A Brief Introduction to auditd

The auditd subsystem is an access monitoring and accounting for Linux developed and maintained by RedHat. It was designed to integrate pretty tightly with the kernel and watch for interesting system calls. Additionally, likely because of this level of integration and detailed logging, it is used as the logger for SELinux.

All in all, it is a pretty fantastic tool for monitoring what’s happening on your system. Since it operates at the kernel level this gives us a hook into any system operation we want. We have the option to write a log any time a particular system call happens, whether that be unlink or getpid. We can monitor access to any file, all network traffic, really anything we want. The level of detail is pretty phenomenal and, since it operates at such a low level, the granularity of information is incredibly useful.

The biggest downfall is actually a result of the design that makes it so handy. This is itself a logging system and as a result does not use syslog. The good thing here is that it doesn’t have to rely on anything external to operate, so a typo in your (syslog|rsyslog|syslog-ng).conf file won’t result in losing your system audit logs. As a result you’ll have to manage all the audit logging using the auditd suite of tools. This means any kind of log collection, organization, or archiving may not work with these files, including remote logging. As an aside, auditd does have provisions for remote logging, however they are not as trivial as we’ve come to expect from syslog.

Thanks to the level of integration that it provides your auditd configurations can be quite complex, but I’ve found that there are primarily only two options you need to know.

- -a exit,always -S <syscall>

- -w <filename>

The first of these generates a log whenever the listed syslog exits, and whenever the listed file is modified. Seems pretty easy right? It certainly can be, but it does require some investigation into what system calls interest you, particularly if you’re not familiar with OS programming or POSIX. Fortunately for us there are some standards that give us some guidance on what to look out for. Let’s take, for example, the Center for Internet Security Red Hat Enterprise Linux 6 Benchmark. The relevant section is “5.2 Configure System Account (auditd)” starting on page 99. There is a large number of interesting examples listed, but for our purposes we’ll whittle those down to a more minimal and assume your /etc/audit/audit.rules looks like this.

# This file contains the auditctl rules that are loaded # whenever the audit daemon is started via the initscripts. # The rules are simply the parameters that would be passed # to auditctl. # First rule - delete all -DBased on our earlier discussion we should be able to see that we generate a log message every time any of the following system calls exit: adjtimex, settimeofday, stime, clock_settime, sethostname, setdomainname. This will let us know whenever the time gets changed or if the host or domain name of the system get changed.

# Increase the buffers to survive stress events. # Make this bigger for busy systems -b 1024 -a always,exit -S adjtimex -S settimeofday -S stime -k time-change -a always,exit -S clock_settime -k time-change -a always,exit -S sethostname -S setdomainname -k system-locale -w /etc/group -p wa -k identity -w /etc/passwd -p wa -k identity -w /etc/shadow -p wa -k identity -w /etc/sudoers -p wa -k identity -w /var/run/utmp -p wa -k session -w /var/log/wtmp -p wa -k session -w /var/log/btmp -p wa -k session -w /etc/selinux/ -p wa -k MAC-policy # Disable adding any additional rules - note that adding new rules will require a reboot -e 2

We’re also watching a few files. The first four (group, passwd, shadow, sudo) will let us know whenever users get added, modified, or privileges changed. The next three files (utmp, wtmp, btmp) store the current login state of each user, login/logout history, and failed login attempts respectively. So monitoring these will let us know any time an account is used, or failed login attempt, or more specifically whenever these files get changed which will include malicious covering of tracks. Lastly, we’re watching the directory ‘/etc/selinux/’. Directories are a special case in that this will cause the system to recursively monitor the files in that directory. There is a special caveat that you cannot watch ‘/’.

When watching files we also added the option ‘-p wa’. This tells auditd to only watch for (w)rites or (a)ttribute changes. It should be noted that for write (and read for that matter) we aren’t actually logging on those system calls. Instead we’re logging on ‘open’ if the appropriate flags are set.

It should also be said that the logs are also rather…complete. As an example I added the system call rule for sethostname to a Fedora 17 system, with audit version 2.2.1. This is the resultant log from running “hostname audit-test.home.private” as root.

type=SYSCALL msg=audit(1358306046.744:260): arch=c000003e syscall=170 success=yes exit=0 a0=2025010 a1=17 a2=7 a3=18 items=0 ppid=23922 pid=26742 auid=1000 uid=0 gid=0 euid=0 suid=0 fsuid=0 egid=0 sgid=0 fsgid=0 tty=pts4 ses=16 comm="hostname" exe="/usr/bin/hostname" subj=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023 key="system-locale"There are gobs of fields listed, however the ones that interest me the most are the various field names containing the letters “id”, “exe” and that ugly string of numbers in the first parens. The first bit, 1358306046.744, is the timestamp of the event in epoch time. The exe field contains the full path tot he binary that was executed. Useful, since we know what was run, but it does not contain the full command line including arguments. Not ideal.

Next we see that the command was run by root, since the euid is 0. Interestingly, the field auid (called audit uid) contains 1000, which is the uid of my regular user account on that host. The auid field actually contains the user id of the original logged in user for this login session. This means, that even though I used “su -” to gain a root shell the auditing subsystem still knows who I am. Using su to gain a root shell has always been the bane of account auditing, but the auditd system records information to usefully identify a user. It does not forgive the lack of command line options, but certainly makes me feel better about it.

These examples, while handy, are also only the tip of the iceberg. One would be hard pressed to find a way to get more detailed audit logging than is available here. To help make our way down the rabbit hole of auditd let’s turn this into a series. We’ll collect ideas for use cases and work up an audit config to meet the requirements, much like what I ended up doing on this security.stackexchange.com answer.

If this sounds like fun let me know in the comments and I’ll work up a way to collect the information. Until then…Happy Auditing!

There will be future auditd posts, so check back regularly on the auditd tag.

QotW #35: Dealing with excessive “Carding” attempts

Community moderator Jeff Ferland nominated this week’s question: Dealing with excessive “Carding” attempts.

I found this to be an interesting question for two reasons,

- It turned the classic password brute force on its head by applying it to credit cards

- It attracted the attention from a large number of relatively new users

Jeff Ferland postulated that the website was too helpful with its error codes and recommended returning the same “Transaction Failed” message no matter the error.

User w.c suggested using some kind of additional verification like a CAPTCHA. Also mentioned was the notion of instituting time delays for multiple successive CAPTCHA or transaction failures.

A slightly different tack was discussed by GdD. Instead of suggesting specific mitigations, GdD pointed out the inevitability of the attackers adapting to whatever protections are put in place. The recommendation was to make sure that you keep adapting in turn and force the attackers into your cat and mouse game.

Ajacian81 felt that the attacker’s purpose may not be finding valid numbers at all and instead be performing a payment processing denial of service. The suggested fix was to randomize the name of the input fields in an effort to prevent scripting the site.

John Deters described a company that had previously had the same problem. They completely transferred the problem to their processor by having them automatically decline all charges below a certain threshold. He also pointed out that the FBI may be interested in the situation and should be notified. This, of course, depends on USA jurisdiction.

Both ddyer and Winston Ewert suggested different ways of instituting artificial delays into the processing. Winston discussed outright delaying all transactions while ddyer discussed automated detection of “suspicious” transactions and blocking further transactions from that host for some time period.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

Misadventures with tcpdump Filters

For quite some time I’ve been running into a tricksome situation with tcpdump. While doing analysis I kept running into the situation where none of my filters would work right. For example, let’s presume I have an existing capture file that was taken off a mirrored port. According to the manpage for pcap-filter this command is a syntactically valid construction:

tcpdump -nnr capturefile.pcap host 10.10.15.15

It does not, however, produce any output. I can verify that traffic exists for that host by doing:

tcpdump -nnr capturefile.pcap | grep 10.10.15.15

This does in fact produce the results I want, but is a pretty unfortunate work-around. Part of what makes a tool like tcpdump so useful is the highly complex filtering language available.

I finally sucked up my ego and asked some of my fellows in the security.stackexchange chat room, The DMZ. While our conversation wasn’t strictly helpful, since they seemed just as puzzled as me, talking out the problem did help me come up with some better google search terms.

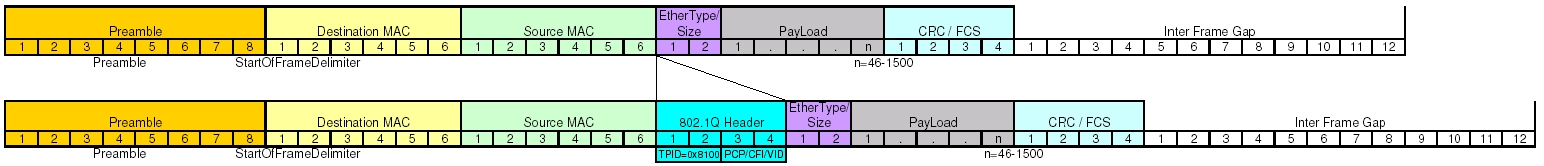

I discovered the problem is entirely to do with 802.1q tagged packets. Since this pcap was taken from a mirrored port of a switch using VLANs it follows all the same rules as a trunked interface. So what that means is that my above filter gets translated as, “Look in the source and destination address fields of the IP header of this standard packet.” Anyone who has had to decode packets, or parse our network traffic, should probably assume that while parsing can be tricky, this lookup shouldn’t be very difficult. I definitely fell into the same boat and boy was I wrong.

My first assumption was that when applying a BPF to a packet capture the following order of events occurred:

- Read in packet

- Parse packet into identifiable tokens

- Check filter strings against tokenized packet

As it turns out, this isn’t what happens at all. Our simple filter above is really just a macro. The host macro will parse the packet, but it is a rather simple parser. By and large this is good, since we want the filters to be fast. In some situations this is bad. For the purposes of discussion let’s make two assumptions:

- The host macro is nothing more than “src host ip or dst host ip”

- The src and dst macros are nothing more than “src = 13th-16th bytes of IP header” and “dst = 17th-20th bytes of IP header”

While the macro language as a whole is really much more complicated, this simplistic view is good enough for this discussion, and in my opinion good enough for normal use.

Now that we’re talking about byte offsets it’s time to pull out our handy dandy header references. Since we’re dealing with IP datagrams embedded in Ethernet frames, let’s take a moment to inspect both headers.

As we can see, the Ethernet frame header is pretty well static, and easily understood. The IP datagram header is variable length, but the options are all at the end of the header, so for our purposes today we can consider it a fixed length as well. This makes our calculations very easy and look something like this.

- This is known to be Ethernet, so add up the length of the header fields (22 bytes) and skip past those.

- Source address in the IP header starts at byte offset 13, so check those 4 bytes.

- Destination address in the IP header starts at byte offset 17, so check those 4 bytes.

So now we’re getting somewhere, and we actually run face first into a surprise. Remember that I said my example packet capture was taken off an interface that pass the VLAN information. Take another look at the headers and see if you can identify the field that contains the VLAN tag information. Hint: You won’t because it’s not there.

Enabling VLANs actually do something interesting to your Ethernet frame header. It adds a few extra fields to your header to a total of 4 bytes. In most cases you won’t see this. Generally each switch port has two modes, access and trunk. An access port is one that you would hand out to a user. This will get connected directly to a computer or a standard unmanaged mini-switch. A trunk port is extra special and is often only used either to connect the networking infrastructure or a server that needs to access several different networks. The extra VLAN header information is only useful on over a trunk, and as such is stripped out before the frame is transmitted on an access port. So on an access port, that header doesn’t exist, so your dumb byte offset math works pretty well. Remember at the beginning when I said mirrored ports followed many of the same rules as a trunk? This is where we begin to see it. Let’s now take a look at what happens to the Ethernet frame header when we add in the VLAN tag information.

Knowing that we were doing some dumb parsing by counting byte offsets, and all of our numbers were based on an Ethernet frame without the VLAN information, we should finally begin to understand our problem. We are dealing with an off-by-4 byte error. According to our IP header quick reference we can do a quick offset calculation and see that we’re attempting to compare the source address against the combination of TimeToLive+Type+HeaderChecksum and attempting to compare the destination address against the source address.

You should be thinking, “Now Scott, yes, this a problem, but we’ll still see half of the communication because when we check for destination address we’ll still end up matching against source!” You would be absolutely correct, except for one problem. As I mentioned before the filter isn’t completely as dumb as we’re pretending that it is. The base assumption for BPF is that when you say host, you’re talking about an IP address. So the filter does actually check the version field to see if the packet is IPv4 or IPv6, values 4 or 6 respectively. The IP version field is the higher order nibble of the first byte. Since we have an off-by-4 byte situation what value are we actually checking? The answer is the higher order nibble of the third byte in the VLAN header. This byte contains the 3 bit PCP field and the 1 bit CFI flag. The 3 bit PCP is actually the 802.1p service priority used in Quality of Service systems.

In most cases 802.1p is unused, which means a QoS of 0, which means those 3 bits are unset. The 1 bit CFI flag, also called Drop Eligible or DE, is used by PCP to say that in the presence of QoS based congestion this packet can be dropped. Since 802.1p is generally not used, this field is also typically 0. In normal situations out filter reads the 0, which is neither a 4 nor a 6, and so our filter automatically rejects. However, since the priority and DE fields are set by QoS systems we could have a situation where the filter accidentally works. If ever 802.1p based QoS is used, the DE flag is unset, and the priority is set to 2 on a scale of 0 (best effort) to 7 (highest) the filter will still believe we’re inspecting an IPv4 packet. Or if the priority is set to 3 and the DE flag is unset then the filter will believe we’re looking at an IPv6 packet. This is all a bit of an aside since it has been my experience that QoS is rarely used, however it does present an interesting edge case.

Ignoring any possibility of QoS in play and going back to straight up 802.1q tagged packets what we have to do instead is modify the filter string to tell the BPF to treat tagged packets as tagged, like so:

tcpdump -nnr capturefile.pcap vlan and host 10.10.15.15

What we end up doing here is filtering only for packets containing a VLAN tag and either of the address fields in the IP header contains 10.10.15.15. By explicitly applying the vlan macro the filtering system will properly detect the VLAN header and account for it when processing the other embedded protocols. It is worth noting that this will only match on packets that contain the VLAN header. If you want to generalize your filter, say you don’t know or your capture contains a mix of packets that may or may not have a VLAN tag, you can complicate your filter to do something like this.

tcpdump -nnr capturefile.pcap 'host 10.10.15.15 or ( vlan and host 10.10.15.15 )'

Finding out that VLANs are used on networks that you’re dealing with, and if the infrastructure is any more complicated than a 10 person office it probably does, has some pretty far reaching consequences. Any time one applies pcap filters to a capture you’ll need to take into account 802.11q tags. You’ll definitely want to keep this in mind when using BPF files to distribute load across multiple snort processes or when using BPFs to do targeted analysis using tools like Argus. Depending on the configuration of your interface, your monitoring port may actually have a native vlan. If that is the case you’ll find that you do receive data, which may disguise the fact that you’re not receiving all of the data.

QotW #24: Why do people tell me not to use VLANs for security?

This week’s question of the week was asked by user jtnire who was asking a question very near and dear to those security professionals who came out of networking or systems backgrounds. He was doing some network design and came across a classic statement that, “VLANs are Not a Security Tool”.

As of this writing, jtnire had not accepted any answers, however user Rory McCune was leading the pack of answers. Rory focused primarily on the classic human problem of misconfiguration, particularly easy when we’re talking about typing Gi/0/4 when you meant Gi/1/4, as opposed to plugging a cable into the wrong port. He also specifically called out VLAN Hopping, which can abuse a misconfiguration to allow a malicious user access to a non-authorized VLAN.

User and moderator Rory Alsop, speaking from an audit perspective, expanded on what the other Rory mentioned and focused more generally on what would make him double-take. He pointed out that VLANs are generally used for cheap network segmentation and that if you’re using them for as a security tool, then you probably want to do it right and use a physically isolated network instead.

Jakob Borg came in with a completely different approach. He explained that, as an ISP, VLANs are a crucial component of their environment and when done right can be a very powerful tool from both a security and service prospective. User jliendo largely agreed with Jakob that configuration is king, and when configured properly is an excellent tool in your security arsenal. He also went into more technical detail about some of the possible attacks against VLANs and how they can be mitigated.

In this author’s opinion this is a fantastic question as VLANs are becoming an extremely common mechanism for network isolation. The answers also did a great job of coming at the problem from all manner of angles, from external auditors to in the trenches technicians.

Liked this question of the week? Interested in reading it or adding an answer? See the question in full. Have questions of a security nature of your own? Security expert and want to help others? Come and join us at security.stackexchange.com.

SSL Chain Cert Fun with Nessus

My workplace recently, for some definitions of recent, switched the company we use for certificate signing to InCommon. There were quite a few technical/administrative advantages, and since we’re educational, price was a big factor. Everyone has been really happy with the results. Well, except for this one thing. InCommon is not a top level trusted CA, they chain through AddTrust. This isn’t actually all that big a deal, really, as AddTrust is a common CA to have in your trusted bundle, and all we had to do was configure the InCommon chain certificate on our web servers. Other than the occasional chain breakage on some mobile browsers everything seemed peachy. Except, that is, when we ran a vulnerability scan.

Shortly after we switched we started noticing some odd alerts coming out of our vulnerability scans. At first one or two were reporting that the SSL certificate could not be validated. We manually verified the certificates, declared them as false positives, and moved on. Over time more and more systems started reporting this error. Eventually the problem had propagated out far enough that I started digging into it. For reference, the PluginID we’re looking at here is 51192.

I learned two very important, and relevant, pieces of information that day:

- Nessus was not properly validating the chain.

- Chain Certificate files are a little stranger than expected.

Instead of using a system default CA bundle, Nessus ships with its own. You can find the bundle, called known_CA.inc, in the plugin directory. So on Linux systems you should be looking at /opt/nessus/lib/nessus/plugins/known_CA.inc. If you are using a Windows scanner, well, you’re on your own. This is a fairly standard looking CA bundle, and I found that AddTrust was, in fact, included. I did not, however, find any reference to InCommon. Since they are somehow related to Internet2 I looked for them, also no luck.

This isn’t really that big a deal, though. Nessus will also look for, but will not update, a secondary bundle called custom_CA.inc. In most cases, this file would be used to include a local CA, for instance in a closed corporate network where one generates self-signed certificates as a matter of course. However, since you can use it to include arbitrary CA certs we can use it to fix our problem.

It’s easy enough for me to get the intermediate cert, what with it being public and all. This is where things started to get a little weird, though. In order to stay consistent with the known_CA.inc I included the certificate as a decoded X.509+PEM. Placing only the intermediate cert in this file resulted in, again, the certificate chain failing to validate. Next, what follows is a Nessus debugging tip that was roughly an hour’s worth of swearing in the discovering:

If you don’t think the web interface is showing you sufficient information, look at the plugin output in the raw XML.

You can get this by either exporting the report, or by finding it in the user’s reports folder on the scanner. What I discovered was that all of the various and sundry certificates were being read and validated. The chain, however, was being checked in the wrong order, in this case: webserver->AddTrust->InCommon.

After a little more trial and error I learned that, not only, did I need to have both the InCommon intermediate, but also the AddTrust certificates in my custom_CA.inc file, but that the order of the certs in the file also mattered. As it happens, AddTrust had to be entered first, followed by InCommon. This does make some amount of sense, when I adjusted my thought process to an actual chain where AddTrust was the “top-level”.

For completeness, I copied the newly complete custom_CA.inc file to my test webserver and included it as a chain cert using the SSLCertificateChainFile option. This is Apache httpd on Linux, you nginx or IIS folks are on your own. After removing the custom_CA.inc on the Nessus scanner and re-running the scan resulted in the certificate properly validating.

This left me in a good place in two ways:

- I now had a properly formatted custom_CA.inc file that I could put into puppet for all the scanners.

- I now also had a properly formatted chain cert file for inclusion on the web servers.

This fixes the problem from both sides, the server presenting all the correct information, as well as the scanner for cleaning up a false positive. For reference, included below is the chain cert file that was generated. As mentioned previously, it is the same format as a CA bundle. For each certificate you’ll find the ASCII text decoded certificate information, followed by the Base64 encoded PEM version of the same certificate. In my testing, Nessus would accept only the PEM versions, however I wanted to include both outputs since it appears to be the standard.

Certificate: Data: Version: 3 (0x2) Serial Number: 7f:71:c1:d3:a2:26:b0:d2:b1:13:f3:e6:81:67:64:3e Signature Algorithm: sha1WithRSAEncryption Issuer: C=SE, O=AddTrust AB, OU=AddTrust External TTP Network, CN=AddTrust External CA Root Validity Not Before: Dec 7 00:00:00 2010 GMT Not After : May 30 10:48:38 2020 GMT Subject: C=US, O=Internet2, OU=InCommon, CN=InCommon Server CA Subject Public Key Info: Public Key Algorithm: rsaEncryption Public-Key: (2048 bit) Modulus: 00:97:7c:c7:c8:fe:b3:e9:20:6a:a3:a4:4f:8e:8e: 34:56:06:b3:7a:6c:aa:10:9b:48:61:2b:36:90:69: e3:34:0a:47:a7:bb:7b:de:aa:6a:fb:eb:82:95:8f: ca:1d:7f:af:75:a6:a8:4c:da:20:67:61:1a:0d:86: c1:ca:c1:87:af:ac:4e:e4:de:62:1b:2f:9d:b1:98: af:c6:01:fb:17:70:db:ac:14:59:ec:6f:3f:33:7f: a6:98:0b:e4:e2:38:af:f5:7f:85:6d:0e:74:04:9d: f6:27:86:c7:9b:8f:e7:71:2a:08:f4:03:02:40:63: 24:7d:40:57:8f:54:e0:54:7e:b6:13:48:61:f1:de: ce:0e:bd:b6:fa:4d:98:b2:d9:0d:8d:79:a6:e0:aa: cd:0c:91:9a:a5:df:ab:73:bb:ca:14:78:5c:47:29: a1:ca:c5:ba:9f:c7:da:60:f7:ff:e7:7f:f2:d9:da: a1:2d:0f:49:16:a7:d3:00:92:cf:8a:47:d9:4d:f8: d5:95:66:d3:74:f9:80:63:00:4f:4c:84:16:1f:b3: f5:24:1f:a1:4e:de:e8:95:d6:b2:0b:09:8b:2c:6b: c7:5c:2f:8c:63:c9:99:cb:52:b1:62:7b:73:01:62: 7f:63:6c:d8:68:a0:ee:6a:a8:8d:1f:29:f3:d0:18: ac:ad Exponent: 65537 (0x10001) X509v3 extensions: X509v3 Authority Key Identifier: keyid:AD:BD:98:7A:34:B4:26:F7:FA:C4:26:54:EF:03:BD:E0:24:CB:54:1A

X509v3 Subject Key Identifier: 48:4F:5A:FA:2F:4A:9A:5E:E0:50:F3:6B:7B:55:A5:DE:F5:BE:34:5D X509v3 Key Usage: critical Certificate Sign, CRL Sign X509v3 Basic Constraints: critical CA:TRUE, pathlen:0 X509v3 Certificate Policies: Policy: X509v3 Any Policy

X509v3 CRL Distribution Points:

Full Name: URI:http://crl.usertrust.com/AddTrustExternalCARoot.crl

Authority Information Access: CA Issuers - URI:http://crt.usertrust.com/AddTrustExternalCARoot.p7c CA Issuers - URI:http://crt.usertrust.com/AddTrustUTNSGCCA.crt OCSP - URI:http://ocsp.usertrust.com

Signature Algorithm: sha1WithRSAEncryption 93:66:21:80:74:45:85:4b:c2:ab:ce:32:b0:29:fe:dd:df:d6: 24:5b:bf:03:6a:6f:50:3e:0e:1b:b3:0d:88:a3:5b:ee:c4:a4: 12:3b:56:ef:06:7f:cf:7f:21:95:56:3b:41:31:fe:e1:aa:93: d2:95:f3:95:0d:3c:47:ab:ca:5c:26:ad:3e:f1:f9:8c:34:6e: 11:be:f4:67:e3:02:49:f9:a6:7c:7b:64:25:dd:17:46:f2:50: e3:e3:0a:21:3a:49:24:cd:c6:84:65:68:67:68:b0:45:2d:47: 99:cd:9c:ab:86:29:11:72:dc:d6:9c:36:43:74:f3:d4:97:9e: 56:a0:fe:5f:40:58:d2:d5:d7:7e:7c:c5:8e:1a:b2:04:5c:92: 66:0e:85:ad:2e:06:ce:c8:a3:d8:eb:14:27:91:de:cf:17:30: 81:53:b6:66:12:ad:37:e4:f5:ef:96:5c:20:0e:36:e9:ac:62: 7d:19:81:8a:f5:90:61:a6:49:ab:ce:3c:df:e6:ca:64:ee:82: 65:39:45:95:16:ba:41:06:00:98:ba:0c:56:61:e4:c6:c6:86: 01:cf:66:a9:22:29:02:d6:3d:cf:c4:2a:8d:99:de:fb:09:14: 9e:0e:d1:d5:c6:d7:81:dd:ad:24:ab:ac:07:05:e2:1d:68:c3: 70:66:5f:d3 -----BEGIN CERTIFICATE----- MIIEwzCCA6ugAwIBAgIQf3HB06ImsNKxE/PmgWdkPjANBgkqhkiG9w0BAQUFADBv MQswCQYDVQQGEwJTRTEUMBIGA1UEChMLQWRkVHJ1c3QgQUIxJjAkBgNVBAsTHUFk ZFRydXN0IEV4dGVybmFsIFRUUCBOZXR3b3JrMSIwIAYDVQQDExlBZGRUcnVzdCBF eHRlcm5hbCBDQSBSb290MB4XDTEwMTIwNzAwMDAwMFoXDTIwMDUzMDEwNDgzOFow UTELMAkGA1UEBhMCVVMxEjAQBgNVBAoTCUludGVybmV0MjERMA8GA1UECxMISW5D b21tb24xGzAZBgNVBAMTEkluQ29tbW9uIFNlcnZlciBDQTCCASIwDQYJKoZIhvcN AQEBBQADggEPADCCAQoCggEBAJd8x8j+s+kgaqOkT46ONFYGs3psqhCbSGErNpBp 4zQKR6e7e96qavvrgpWPyh1/r3WmqEzaIGdhGg2GwcrBh6+sTuTeYhsvnbGYr8YB +xdw26wUWexvPzN/ppgL5OI4r/V/hW0OdASd9ieGx5uP53EqCPQDAkBjJH1AV49U 4FR+thNIYfHezg69tvpNmLLZDY15puCqzQyRmqXfq3O7yhR4XEcpocrFup/H2mD3 /+d/8tnaoS0PSRan0wCSz4pH2U341ZVm03T5gGMAT0yEFh+z9SQfoU7e6JXWsgsJ iyxrx1wvjGPJmctSsWJ7cwFif2Ns2Gig7mqojR8p89AYrK0CAwEAAaOCAXcwggFz MB8GA1UdIwQYMBaAFK29mHo0tCb3+sQmVO8DveAky1QaMB0GA1UdDgQWBBRIT1r6 L0qaXuBQ82t7VaXe9b40XTAOBgNVHQ8BAf8EBAMCAQYwEgYDVR0TAQH/BAgwBgEB /wIBADARBgNVHSAECjAIMAYGBFUdIAAwRAYDVR0fBD0wOzA5oDegNYYzaHR0cDov L2NybC51c2VydHJ1c3QuY29tL0FkZFRydXN0RXh0ZXJuYWxDQVJvb3QuY3JsMIGz BggrBgEFBQcBAQSBpjCBozA/BggrBgEFBQcwAoYzaHR0cDovL2NydC51c2VydHJ1 c3QuY29tL0FkZFRydXN0RXh0ZXJuYWxDQVJvb3QucDdjMDkGCCsGAQUFBzAChi1o dHRwOi8vY3J0LnVzZXJ0cnVzdC5jb20vQWRkVHJ1c3RVVE5TR0NDQS5jcnQwJQYI KwYBBQUHMAGGGWh0dHA6Ly9vY3NwLnVzZXJ0cnVzdC5jb20wDQYJKoZIhvcNAQEF BQADggEBAJNmIYB0RYVLwqvOMrAp/t3f1iRbvwNqb1A+DhuzDYijW+7EpBI7Vu8G f89/IZVWO0Ex/uGqk9KV85UNPEerylwmrT7x+Yw0bhG+9GfjAkn5pnx7ZCXdF0by UOPjCiE6SSTNxoRlaGdosEUtR5nNnKuGKRFy3NacNkN089SXnlag/l9AWNLV1358 xY4asgRckmYOha0uBs7Io9jrFCeR3s8XMIFTtmYSrTfk9e+WXCAONumsYn0ZgYr1 kGGmSavOPN/mymTugmU5RZUWukEGAJi6DFZh5MbGhgHPZqkiKQLWPc/EKo2Z3vsJ FJ4O0dXG14HdrSSrrAcF4h1ow3BmX9M= -----END CERTIFICATE----- Certificate: Data: Version: 3 (0x2) Serial Number: 1 (0x1) Signature Algorithm: sha1WithRSAEncryption Issuer: C=SE, O=AddTrust AB, OU=AddTrust External TTP Network, CN=AddTrust External CA Root Validity Not Before: May 30 10:48:38 2000 GMT Not After : May 30 10:48:38 2020 GMT Subject: C=SE, O=AddTrust AB, OU=AddTrust External TTP Network, CN=AddTrust External CA Root Subject Public Key Info: Public Key Algorithm: rsaEncryption Public-Key: (2048 bit) Modulus: 00:b7:f7:1a:33:e6:f2:00:04:2d:39:e0:4e:5b:ed: 1f:bc:6c:0f:cd:b5:fa:23:b6:ce:de:9b:11:33:97: a4:29:4c:7d:93:9f:bd:4a:bc:93:ed:03:1a:e3:8f: cf:e5:6d:50:5a:d6:97:29:94:5a:80:b0:49:7a:db: 2e:95:fd:b8:ca:bf:37:38:2d:1e:3e:91:41:ad:70: 56:c7:f0:4f:3f:e8:32:9e:74:ca:c8:90:54:e9:c6: 5f:0f:78:9d:9a:40:3c:0e:ac:61:aa:5e:14:8f:9e: 87:a1:6a:50:dc:d7:9a:4e:af:05:b3:a6:71:94:9c: 71:b3:50:60:0a:c7:13:9d:38:07:86:02:a8:e9:a8: 69:26:18:90:ab:4c:b0:4f:23:ab:3a:4f:84:d8:df: ce:9f:e1:69:6f:bb:d7:42:d7:6b:44:e4:c7:ad:ee: 6d:41:5f:72:5a:71:08:37:b3:79:65:a4:59:a0:94: 37:f7:00:2f:0d:c2:92:72:da:d0:38:72:db:14:a8: 45:c4:5d:2a:7d:b7:b4:d6:c4:ee:ac:cd:13:44:b7: c9:2b:dd:43:00:25:fa:61:b9:69:6a:58:23:11:b7: a7:33:8f:56:75:59:f5:cd:29:d7:46:b7:0a:2b:65: b6:d3:42:6f:15:b2:b8:7b:fb:ef:e9:5d:53:d5:34: 5a:27 Exponent: 65537 (0x10001) X509v3 extensions: X509v3 Subject Key Identifier: AD:BD:98:7A:34:B4:26:F7:FA:C4:26:54:EF:03:BD:E0:24:CB:54:1A X509v3 Key Usage: Certificate Sign, CRL Sign X509v3 Basic Constraints: critical CA:TRUE X509v3 Authority Key Identifier: keyid:AD:BD:98:7A:34:B4:26:F7:FA:C4:26:54:EF:03:BD:E0:24:CB:54:1A DirName:/C=SE/O=AddTrust AB/OU=AddTrust External TTP Network/CN=AddTrust External CA Root serial:01

Signature Algorithm: sha1WithRSAEncryption b0:9b:e0:85:25:c2:d6:23:e2:0f:96:06:92:9d:41:98:9c:d9: 84:79:81:d9:1e:5b:14:07:23:36:65:8f:b0:d8:77:bb:ac:41: 6c:47:60:83:51:b0:f9:32:3d:e7:fc:f6:26:13:c7:80:16:a5: bf:5a:fc:87:cf:78:79:89:21:9a:e2:4c:07:0a:86:35:bc:f2: de:51:c4:d2:96:b7:dc:7e:4e:ee:70:fd:1c:39:eb:0c:02:51: 14:2d:8e:bd:16:e0:c1:df:46:75:e7:24:ad:ec:f4:42:b4:85: 93:70:10:67:ba:9d:06:35:4a:18:d3:2b:7a:cc:51:42:a1:7a: 63:d1:e6:bb:a1:c5:2b:c2:36:be:13:0d:e6:bd:63:7e:79:7b: a7:09:0d:40:ab:6a:dd:8f:8a:c3:f6:f6:8c:1a:42:05:51:d4: 45:f5:9f:a7:62:21:68:15:20:43:3c:99:e7:7c:bd:24:d8:a9: 91:17:73:88:3f:56:1b:31:38:18:b4:71:0f:9a:cd:c8:0e:9e: 8e:2e:1b:e1:8c:98:83:cb:1f:31:f1:44:4c:c6:04:73:49:76: 60:0f:c7:f8:bd:17:80:6b:2e:e9:cc:4c:0e:5a:9a:79:0f:20: 0a:2e:d5:9e:63:26:1e:55:92:94:d8:82:17:5a:7b:d0:bc:c7: 8f:4e:86:04 -----BEGIN CERTIFICATE----- MIIENjCCAx6gAwIBAgIBATANBgkqhkiG9w0BAQUFADBvMQswCQYDVQQGEwJTRTEU MBIGA1UEChMLQWRkVHJ1c3QgQUIxJjAkBgNVBAsTHUFkZFRydXN0IEV4dGVybmFs IFRUUCBOZXR3b3JrMSIwIAYDVQQDExlBZGRUcnVzdCBFeHRlcm5hbCBDQSBSb290 MB4XDTAwMDUzMDEwNDgzOFoXDTIwMDUzMDEwNDgzOFowbzELMAkGA1UEBhMCU0Ux FDASBgNVBAoTC0FkZFRydXN0IEFCMSYwJAYDVQQLEx1BZGRUcnVzdCBFeHRlcm5h bCBUVFAgTmV0d29yazEiMCAGA1UEAxMZQWRkVHJ1c3QgRXh0ZXJuYWwgQ0EgUm9v dDCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBALf3GjPm8gAELTngTlvt H7xsD821+iO2zt6bETOXpClMfZOfvUq8k+0DGuOPz+VtUFrWlymUWoCwSXrbLpX9 uMq/NzgtHj6RQa1wVsfwTz/oMp50ysiQVOnGXw94nZpAPA6sYapeFI+eh6FqUNzX mk6vBbOmcZSccbNQYArHE504B4YCqOmoaSYYkKtMsE8jqzpPhNjfzp/haW+710LX a0Tkx63ubUFfclpxCDezeWWkWaCUN/cALw3CknLa0Dhy2xSoRcRdKn23tNbE7qzN E0S3ySvdQwAl+mG5aWpYIxG3pzOPVnVZ9c0p10a3CitlttNCbxWyuHv77+ldU9U0 WicCAwEAAaOB3DCB2TAdBgNVHQ4EFgQUrb2YejS0Jvf6xCZU7wO94CTLVBowCwYD VR0PBAQDAgEGMA8GA1UdEwEB/wQFMAMBAf8wgZkGA1UdIwSBkTCBjoAUrb2YejS0 Jvf6xCZU7wO94CTLVBqhc6RxMG8xCzAJBgNVBAYTAlNFMRQwEgYDVQQKEwtBZGRU cnVzdCBBQjEmMCQGA1UECxMdQWRkVHJ1c3QgRXh0ZXJuYWwgVFRQIE5ldHdvcmsx IjAgBgNVBAMTGUFkZFRydXN0IEV4dGVybmFsIENBIFJvb3SCAQEwDQYJKoZIhvcN AQEFBQADggEBALCb4IUlwtYj4g+WBpKdQZic2YR5gdkeWxQHIzZlj7DYd7usQWxH YINRsPkyPef89iYTx4AWpb9a/IfPeHmJIZriTAcKhjW88t5RxNKWt9x+Tu5w/Rw5 6wwCURQtjr0W4MHfRnXnJK3s9EK0hZNwEGe6nQY1ShjTK3rMUUKhemPR5ruhxSvC Nr4TDea9Y355e6cJDUCrat2PisP29owaQgVR1EX1n6diIWgVIEM8med8vSTYqZEX c4g/VhsxOBi0cQ+azcgOno4uG+GMmIPLHzHxREzGBHNJdmAPx/i9F4BrLunMTA5a mnkPIAou1Z5jJh5VkpTYghdae9C8x49OhgQ= -----END CERTIFICATE-----

Base Rulesets in IPTables

This question on Security.SE made me think in a rather devious way. At first, I found it to be rather poorly worded, imprecise, and potentially not worth salvaging. After a couple of days I started to realize exactly how many times I’ve really been asked this question by well intentioned, and often, knowledgeable people. The real question should be, “Is there a recommended set of firewall rules that can be used as a standard config?” Or more plainly, I have a bunch of systems, so what rules should they all have no matter what services they provide. Now that is a question that can reasonably be answered, and what I’ve typically given is this:

-A INPUT -i lo -j ACCEPT -A INPUT -p icmp --icmp-type any -j ACCEPT # Force SYN checks -A INPUT -p tcp ! --syn -m state --state NEW -j DROP # Drop all fragments -A INPUT -f -j DROP # Drop XMAS packets -A INPUT -p tcp --tcp-flags ALL ALL -j DROP # Drop NULL packets -A INPUT -p tcp --tcp-flags ALL NONE -j DROP -A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPTThe first and last lines should be pretty obvious so I won’t go into too much detail. Enough services use loopback that, except in very restrictive environments, attempting to firewall them will almost definitely be more work than is useful. Similarly, the ESTABLISHED keyword allows return traffic for outgoing connections. The RELATED keyword takes care of things like FTP that use multiple ports and may trigger multiple flows that are not part of the same connection, but are none-the-less dependent on each other. In a perfect world these would be at the top for performance reasons. Since rules are processed in order we really want the fewest number of rules processed as possible, however in order to get the full benefit from the above rule set, we want to run as many packets as possible by them.

The allow all on ICMP is probably the most controversial part of this set. While there are reconnaissance concerns with ICMP, the network infrastructure is designed with the assumption that ICMP is passed. You’re better off allowing it (at least within your organization’s network space), or grokking all of the ICMP messages and determining your own balance. Look at this question for some worthwhile discussion on the matter.

Now, down to the brass tacks. Let’s look at each of the wonky rules in order.

Forcing SYN Checks

-A INPUT -p tcp ! --syn -m state --state NEW -j DROPThis rule performs two checks:

- Is the SYN bit NOT set, and

- Is this packet NOT part of a connection in the state table

If both conditions match, then the packet gets dropped. This is a bit of a low-hanging fruit kind of rule. If these conditions match, then we’re looking at a packet that we just downright shouldn’t be interested in. This could indicate a situation where the connection has been pruned from the conntrack state table, or perhaps a malicious replay event. Either way, there isn’t any typical benefit to allowing this traffic, so let’s explicitly block it.

Fragments Be Damned

-A INPUT -f -j DROPThis is an easy one. Drop any packet with the fragment bit set. I fully realize this sounds pretty severe. Networks were designed with the notion of fragmentation, in fact the the IPv4 header specifically contains a flag that indicates whether or not that packet should or should not be fragmented. Considering that this is a core feature of IPv4, fragmentation is still a bit of a touchy subject. If the DF bit is set, then MTU path discovery should just work. However, not all devices respond back with the correct ICMP message. The above rule is one of them, however that’s because ICMP type 3 code 4 (wikipedia) isn’t a reject option in iptables. As a result of this one can’t really know whether or not your packets will get fragmented along the network. Nowadays, on internal networks at least, this usually isn’t a problem. You may run into problems, however, when dealing with VPNs and similar where your 1500 byte ethernet segment suddenly needs to make space for an extra header.

So now that we’ve talked about all the reasons to not drop fragments, here’s the reason to. By default, standard iptables rules are only applied to the packet marked as the first fragment. Meaning, any packet marked as a fragment with an offset of 2 or greater is passed through, the assumption being that if we receive an invalid packet then reassembly will fail and the packets will get dropped anyway. In my experience, fragmentation is a small enough problem that I don’t want to deal with risk and block it anyway. This should only get better with IPv6 as path MTU discovery is placed more firmly on the client and is considered less “Magic.”

Christmas in July

-A INPUT -p tcp --tcp-flags ALL ALL -j DROPNetwork reconnaissance is a big deal. It allows us to get a good feel for what’s out there so that when doing our work we have some indications of what might exist instead of just blindly stabbing in the dark. So called ‘Christmas Tree Packets’ are one of those reconnaissance techniques used by most network scanners. The idea is that for whatever protocol we use, whether TCP/UDP/ICMP/etc, every flag is set and every option is enabled. The notion is that just like a old style indicator board the packet is “lit up like a Christmas tree”. By using a packet like this we can look at the behavior of the responses and make some guesses about what operating system and version the remote host is running. For a White Hat, we can use that information to build out a distribution graph of what types of systems we have, what versions they’re running, where we might want to focus our protections, or who we might need to visit for an upgrade/remediation. For a Black Hat, this information can be used to find areas of the network to focus their attacks on or particularly vulnerable looking systems that they can attempt to exploit. By design some flags are incompatible with each-other and as a result any Christmas Tree Packet is at best a protocol anomaly, and at worst a precursor to malicious activity. In either case, there is normally no compelling reason to accept such packets, so we should drop them just to be safe.

I have read instances of Christmas Tree Packets resulting in Denial of Service situations, particularly with networking gear. The idea being that since so many flags and options are set, the processing complexity, and thus time, is increased. Flood a network with these and watch the router stop processing normal packets. In truth, I do not have experience with this failure scenario.

Nothing to See Here

-A INPUT -p tcp --tcp-flags ALL NONE -j DROPWhen we see a packet where none of the flags or options are set we use the term Null Packet. Just like with the above Christmas Tree Packet, one should not see this on a normal, well behaved network. Also, just as above, they can be used for reconnaissance purposes to try and determine the OS of the remote host.

QotW #2: Configuration Hardening

Question of the Week #2

Unlike last week, where we looked at a specific question @nealmcb recommended the entire hardening tag! This is a well highlighted aspect of Information Security since it is what got many of us into the field. We knew that the factory switch config or default Windows installation wasn’t quite good enough so we tried to figure out exactly what to change in order to make things better.

From the basic Operating System hardening techniques that many of us are familiar with, we as a community have started applying the principles to more and more devices. As of writing this entry we have 19 open questions in that area ranging from What does defense in depth entail for a web app? to Best practices for securing an iPhone.

In my opinion, we’re clearly Getting It ™, as an industry, when we have moved on from Hardening Linux Server and are having real honest discussions about securing iPhones and Best practices for securing an android device.

In addition to the mobile device questions listed above, I think my favorites so far have been:

- Apache Server Hardening (Eric W)

- IIS and SQLServer Hardening (Voulnet)

- What methods are available for securing SSH? (Olivier Lalonde)

- What does defense in depth entail for a web app? (VirtuosiMedia)

While some questions have received a large amount of traffic, such as Apache Server Hardening at 9 answers and over 1000 views, others have managed to slip through with relatively little traffic, like How do I apply a security baseline to 2008 R2? slipping in at 1 answer and >200 views.

Is there a platform you use that you are not sure how to secure? Do you have experience with hardening a particular configuration? Join the party by browsing through the questions in the hardening tag and adding your own fancy tips and tricks. Better yet, ask ask ask! Show us up, or work us hard.